Almost all numerical computation is performed using the
IEEE 754-1985 Standard for Binary Floating-Point Arithmetic.
The two formats that we deal with in practice are the 32 bit and
64 bit formats. You need to know how to get the format you want
in the language you are programming in. Complex numbers use two values
(a real part and an imaginary part).

                                               older
          C        Java     Fortran 95         Fortran      Ada 95         MATLAB
          ------   ------   ----------------   ----------   ------------   ---------
32 bit    float    float    real               real         float          N/A
64 bit    double   double   double precision   real*8       long_float     'default'

complex
32 bit    'none'   'none'   complex            complex      complex        N/A
64 bit    'none'   'none'   double complex     complex*16   long_complex   'default'

'none' means not provided by the language (may be available as a library).
N/A means not available, you get the default.
(C99 and later do provide float complex and double complex through <complex.h>.)

IEEE Floating-Point numbers are stored as follows:

The single format 32 bit has
  1 bit for sign, 8 bits for exponent, 23 bits for fraction
The double format 64 bit has
  1 bit for sign, 11 bits for exponent, 52 bits for fraction

For normalized numbers there is an implied '1' immediately to the left
of the fraction, in the 24th (single) or 53rd (double) bit position,
that is not stored. The fraction including the non-stored bit is
called the significand.

The exponent is stored as a biased value, not a signed value.
The 8-bit exponent has 127 added, the 11-bit exponent has 1023 added.
The all-zeros and all-ones exponent values are "stolen" for
special values: zero and denormalized numbers, +/- infinity, not a number (NaN).

Floating point numbers are sign magnitude. Invert the sign bit to negate.
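The field layout described above can be pulled apart in C. This is only a minimal sketch, assuming that float on the target machine is the IEEE 32 bit single format; the names float_fields, decode_float and negate_by_sign_bit are hypothetical helpers introduced for illustration.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical helper type: the three stored fields of a 32 bit float. */
typedef struct {
    uint32_t sign;      /* 1 bit, 1 = negative                  */
    uint32_t exponent;  /* 8 bits, stored with a bias of 127    */
    uint32_t fraction;  /* 23 bits, the leading 1 is not stored */
} float_fields;

/* Split a float into its stored fields.
   Assumes float is the IEEE 754 single format.
   memcpy copies the bits without violating aliasing rules. */
float_fields decode_float(float x)
{
    uint32_t bits;
    float_fields f;

    memcpy(&bits, &x, sizeof bits);
    f.sign     = bits >> 31;
    f.exponent = (bits >> 23) & 0xFFu;
    f.fraction = bits & 0x7FFFFFu;
    return f;
}

/* Sign magnitude: negate by inverting only the sign bit. */
float negate_by_sign_bit(float x)
{
    uint32_t bits;

    memcpy(&bits, &x, sizeof bits);
    bits ^= 0x80000000u;
    memcpy(&x, &bits, sizeof x);
    return x;
}
```

For example, decode_float(0.75f) gives sign 0, exponent 126 and fraction 0x400000, which is the bit pattern 3F 40 00 00.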

Some example numbers and their bit patterns. The leading "1" of the
significand is not stored. The power of 2 is the stored (biased)
exponent minus the bias.

32 bit single format

             stored        bit 31  30....23  22....................0  significand in binary
decimal      hexadecimal   sign    exponent  fraction                 * 2^(biased exponent - bias)

1.0          3F 80 00 00   0       01111111  00000000000000000000000  1.0       * 2^(127-127)
0.5          3F 00 00 00   0       01111110  00000000000000000000000  1.0       * 2^(126-127)
0.75         3F 40 00 00   0       01111110  10000000000000000000000  1.1       * 2^(126-127)
0.99999994   3F 7F FF FF   0       01111110  11111111111111111111111  1.1111... * 2^(126-127)
0.1          3D CC CC CD   0       01111011  10011001100110011001101  1.1001... * 2^(123-127)

64 bit double format

                     stored                    bit 63  62.......52  51........0  significand in binary
decimal              hexadecimal               sign    exponent     fraction     * 2^(biased exponent - bias)

1.0                  3F F0 00 00 00 00 00 00   0       01111111111  000 ... 000  1.0        * 2^(1023-1023)
0.5                  3F E0 00 00 00 00 00 00   0       01111111110  000 ... 000  1.0        * 2^(1022-1023)
0.75                 3F E8 00 00 00 00 00 00   0       01111111110  100 ... 000  1.1        * 2^(1022-1023)
0.9999999999999999   3F EF FF FF FF FF FF FF   0       01111111110  111 ... 111  1.11111... * 2^(1022-1023)
0.1                  3F B9 99 99 99 99 99 9A   0       01111111011  10011..1010  1.10011... * 2^(1019-1023)

Note that any integer in the range 0 to 2^23 - 1 fits in the 23-bit
fraction and may be represented exactly in the 32 bit format (with the
non-stored bit, every integer up to 2^24 is exact). Such an integer
times a power of two may also be represented exactly, as long as the
resulting exponent stays in the range -126 to +127. Numbers such as
0.1, 0.3, 1.0/5.0, 1.0/9.0 are represented approximately.
0.75 is 3/4, which is exact.

Some languages are careful to represent approximated numbers
accurate to plus or minus the least significant bit.
Other languages may be less accurate.
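Whether a particular value is exact in the 32 bit format can be checked with a round trip through float. This is a sketch under the assumption that float is the IEEE single format and double is the IEEE double format; exact_in_float and show_exactness are hypothetical names used only for this illustration.

```c
#include <stdio.h>

/* Returns 1 when x survives conversion to float and back unchanged,
   that is, when x is exactly representable in the 32 bit format. */
int exact_in_float(double x)
{
    return (double)(float)x == x;
}

/* Print a few values with more digits than the format really holds,
   so the approximation error becomes visible. */
void show_exactness(void)
{
    printf("0.75 as float: %.20f\n", (double)0.75f);
    printf("0.1  as float: %.20f\n", (double)0.1f);
    printf("0.1  as double: %.20f\n", 0.1);
}
```

With this check, 0.75 and 16777216.0 (2^24) are exact, while 0.1 and 16777217.0 (2^24 + 1, which needs 25 significand bits) are not.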

/* flt.c  just to look at .o file with hdump */
void flt(void) /* look at IEEE floating point */
{
  float x1 = 1.0f;
  float x2 = 0.5f;
  float x3 = 0.75f;
  float x4 = 0.99999f;       /* not exact, see stored pattern below */
  float x5 = 0.1f;           /* not exact */
  double d1 = 1.0;
  double d2 = 0.5;
  double d3 = 0.75;
  double d4 = 0.99999999;    /* not exact */
  double d5 = 0.1;           /* not exact */
}

The stored constants as seen in the dump, in the order declared.
The "1" of the significand is not stored.

                   31  30....23  22....................0  significand in binary
                   sign exponent fraction                 * 2^(biased exponent - bias)

x1  3F 80 00 00    0   01111111  00000000000000000000000  1.0       * 2^(127-127)
x2  3F 00 00 00    0   01111110  00000000000000000000000  1.0       * 2^(126-127)
x3  3F 40 00 00    0   01111110  10000000000000000000000  1.1       * 2^(126-127)
x4  3F 7F FF 58    0   01111110  11111111111111101011000  1.1111... * 2^(126-127)
x5  3D CC CC CD    0   01111011  10011001100110011001101  1.1001... * 2^(123-127)

                               63  62.......52  51........0
                               sign exponent    fraction

d1  3F F0 00 00 00 00 00 00    0   01111111111  000 ... 000  1.0        * 2^(1023-1023)
d2  3F E0 00 00 00 00 00 00    0   01111111110  000 ... 000  1.0        * 2^(1022-1023)
d3  3F E8 00 00 00 00 00 00    0   01111111110  100 ... 000  1.1        * 2^(1022-1023)
d4  3F EF FF FF FA A1 9C 47    0   01111111110  111 ...      1.11111... * 2^(1022-1023)
d5  3F B9 99 99 99 99 99 9A    0   01111111011  1001 ..1010  1.10011... * 2^(1019-1023)
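As an alternative to dumping the object file, the same bit patterns can be printed from a running program. This is a minimal sketch, assuming float and double are the IEEE 32 bit and 64 bit formats; float_bits, double_bits and dump_examples are hypothetical helper names, not part of flt.c.

```c
#include <inttypes.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Return the 32 stored bits of a float as an unsigned integer. */
uint32_t float_bits(float x)
{
    uint32_t bits;
    memcpy(&bits, &x, sizeof bits);
    return bits;
}

/* Return the 64 stored bits of a double as an unsigned integer. */
uint64_t double_bits(double x)
{
    uint64_t bits;
    memcpy(&bits, &x, sizeof bits);
    return bits;
}

/* Print two of the constants from flt.c in hexadecimal. */
void dump_examples(void)
{
    printf("0.99999f   = %08" PRIX32 "\n", float_bits(0.99999f));
    printf("0.99999999 = %016" PRIX64 "\n", double_bits(0.99999999));
}
```

Working on the integer value rather than on raw bytes keeps the printed digits in the same order as the tables, independent of the byte order of the machine.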

Now, all the above is the memory (RAM) format.
On machines whose floating point registers are wider than double
(for example the 80-bit x87 registers), a load of either float or
double into a floating point register extends the value to greater
precision than double. Floating point arithmetic is then performed at this
greater precision. Upon a store operation, the greater precision is
reduced to the memory format, possibly with rounding.

From a programming viewpoint, always use double.

Exponents must be the same for add and subtract!
(a and b below reuse the digits of A and B in base 2; they are not
the same values as A and B.)

     decimal                binary fraction,            IEEE normalized
                            decimal exponent            binary

A =  3.5  * 10^6       a =  11.1  * 2^6                  1.11  * 2^7
B =  2.5  * 10^5       b =  10.1  * 2^5                  1.01  * 2^6

A+B  3.50 * 10^6       a+b  11.10 * 2^6                  1.110 * 2^7
   + 0.25 * 10^6          +  1.01 * 2^6                + 0.101 * 2^7
   -------------          -------------                -------------
     3.75 * 10^6           100.11 * 2^6                 10.011 * 2^7

                                    IEEE normalize       1.0011  * 2^8
                                    fraction normalize   0.10011 * 2^9

A-B  3.50 * 10^6
   - 0.25 * 10^6
   -------------
     3.25 * 10^6

A*B  3.50 * 10^6
   * 2.5  * 10^5
   -------------
     8.75 * 10^11

A/B  (3.5 * 10^6) / (2.5 * 10^5) = 1.4 * 10^1

The mathematical basis for floating point is simple algebra

The common uses are in computer arithmetic and scientific notation

given: a number x1 expressed as 10^e1 * f1

then 10 is the base, e1 is the exponent and f1 is the fraction

example x1 = 10^3 * .1234 means x1 = 123.4 or .1234*10^3

or in computer notation 0.1234E3

In computers the base is chosen to be 2, i.e. binary notation

for x1 = 2^e1 * f1 where e1=3 and f1 = .1011

then x1 = 101.1 base 2 or, converting to decimal x1 = 5.5 base 10

Computers store the sign bit, 1=negative, the exponent and the

fraction in a floating point word that may be 32 or 64 bits.

The operations of add, subtract, multiply and divide are defined as:

Given x1 = 2^e1 * f1
      x2 = 2^e2 * f2   and e2 <= e1

x1 + x2 = 2^e1 * (f1 + 2^-(e1-e2) * f2)    f2 is shifted, then added to f1
x1 - x2 = 2^e1 * (f1 - 2^-(e1-e2) * f2)    f2 is shifted, then subtracted from f1
x1 * x2 = 2^(e1+e2) * f1 * f2
x1 / x2 = 2^(e1-e2) * (f1 / f2)

An additional operation is usually needed: normalization.
If the resulting "fraction" has digits to the left of the binary
point, then the fraction is shifted right and one is added to
the exponent for each bit shifted, until the result is a fraction
(nothing but zero to the left of the binary point).

We will use fraction normalization, not IEEE normalization:
if the resulting "fraction" has zeros immediately to the right of
the binary point, then the fraction is shifted left and one is
subtracted from the exponent for each bit shifted, until there
is a non zero digit immediately to the right of the binary point.
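The four definitions and the fraction normalization rule can be sketched in C. This is an illustration only, not how hardware does it: the type toy_float and the toy_* functions are hypothetical, the fraction is kept in a double with its sign folded in (rather than a separate sign bit), and only finite values with a nonzero divisor are handled.

```c
/* Hypothetical toy format: value = f * 2^e,
   normalized when 0.5 <= |f| < 1.0 (fraction normalization). */
typedef struct {
    int    e;   /* exponent, a plain signed integer (no bias)   */
    double f;   /* fraction, sign included for simplicity       */
} toy_float;

/* Shift right while digits sit left of the binary point,
   shift left while a zero follows the binary point. */
toy_float toy_normalize(toy_float x)
{
    if (x.f == 0.0) { x.e = 0; return x; }
    while (x.f >= 1.0 || x.f <= -1.0) { x.f /= 2.0; x.e += 1; }
    while (x.f < 0.5 && x.f > -0.5)   { x.f *= 2.0; x.e -= 1; }
    return x;
}

/* Shift a fraction right by 'shift' bit positions. */
static double shift_right(double f, int shift)
{
    while (shift-- > 0) f /= 2.0;
    return f;
}

/* x1 + x2 = 2^e1 * (f1 + 2^-(e1-e2) * f2), with e2 <= e1 */
toy_float toy_add(toy_float x1, toy_float x2)
{
    toy_float r;
    if (x2.e > x1.e) { toy_float t = x1; x1 = x2; x2 = t; } /* make e2 <= e1 */
    r.e = x1.e;
    r.f = x1.f + shift_right(x2.f, x1.e - x2.e);
    return toy_normalize(r);
}

/* x1 - x2 is x1 + (-x2) */
toy_float toy_sub(toy_float x1, toy_float x2)
{
    x2.f = -x2.f;
    return toy_add(x1, x2);
}

/* x1 * x2 = 2^(e1+e2) * f1 * f2 */
toy_float toy_mul(toy_float x1, toy_float x2)
{
    toy_float r;
    r.e = x1.e + x2.e;
    r.f = x1.f * x2.f;
    return toy_normalize(r);
}

/* x1 / x2 = 2^(e1-e2) * (f1 / f2), x2 must not be zero */
toy_float toy_div(toy_float x1, toy_float x2)
{
    toy_float r;
    r.e = x1.e - x2.e;
    r.f = x1.f / x2.f;
    return toy_normalize(r);
}
```

With x1 = {4, 0.5} (8.0) and x2 = {2, 0.5} (2.0), toy_sub gives exponent 3 and fraction 0.75, that is 6.0 after one left shift during normalization.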

Numeric examples using equations:
(exponents are decimal integers, fractions are decimal)
(normalized numbers have 1.0 > fraction >= 0.5, that is, the fraction
 is strictly less than 1.0 and greater than or equal to 0.5)

x1 = 2^4 * 0.5   or x1 = 8.0
x2 = 2^2 * 0.5   or x2 = 2.0

x1 + x2 = 2^4 * (.5 + 2^-(4-2) * .5) = 2^4 * (.5 + .125) = 2^4 * .625

x1 - x2 = 2^4 * (.5 - 2^-(4-2) * .5) = 2^4 * (.5 - .125) = 2^4 * .375
          not normalized, multiply fraction by 2, subtract 1 from exponent
        = 2^3 * .75

x1 * x2 = 2^(4+2) * (.5*.5) = 2^6 * .25    not normalized
        = 2^5 * .5                         normalized

x1 / x2 = 2^(4-2) * (.5/.5) = 2^2 * 1.0    not normalized
        = 2^3 * .5                         normalized

Numeric examples, people friendly:
(exponents are decimal integers, fractions are decimal)
(normalized numbers have 1.0 > fraction >= 0.5)

x1 = 0.5 * 2^4
x2 = 0.5 * 2^2

x1 + x2 =   0.500 * 2^4
          + 0.125 * 2^4    unnormalize x2 to make exponents equal
          -----------
            0.625 * 2^4    result is normalized, done.

x1 - x2 =   0.500 * 2^4
          - 0.125 * 2^4    unnormalize x2 to make exponents equal
          -----------
            0.375 * 2^4    result is not normalized
            0.750 * 2^3    double the fraction, subtract 1 from the exponent

x1 * x2 = 0.5 * 0.5 * 2^2 * 2^4 = 0.25 * 2^6    not normalized
                                = 0.5  * 2^5    normalized

x1 / x2 = (.5/.5) * 2^4/2^2 = 1.0 * 2^2    not normalized
                            = 0.5 * 2^3    normalized:
                                           halve the fraction, add 1 to the exponent
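The standard C library function frexp happens to use the same fraction normalization as these examples: it splits a value into a fraction with 0.5 <= |fraction| < 1.0 and a power of two, and ldexp puts the two back together. The wrappers fraction_of, exponent_of and rebuild below are hypothetical names added only to make the results easy to inspect.

```c
#include <math.h>

/* Fraction part of x under fraction normalization: 0.5 <= |f| < 1.0 */
double fraction_of(double x)
{
    int e;
    return frexp(x, &e);
}

/* Exponent e such that x == fraction_of(x) * 2^e */
int exponent_of(double x)
{
    int e;
    (void)frexp(x, &e);
    return e;
}

/* Recombine a fraction and an exponent into a value: f * 2^e */
double rebuild(double f, int e)
{
    return ldexp(f, e);
}
```

For the results worked out by hand above, 10.0 splits into 0.625 and 4, 6.0 into 0.75 and 3, 16.0 into 0.5 and 5, and 4.0 into 0.5 and 3.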

IEEE 754 Floating Point Standard

The standard has a few minor problems. For example, the principal square
root of every complex number lies in the right half of the complex plane,
and thus the real part of a square root should never be negative. As a
concession to early hardware, the standard defines sqrt(-0) to be -0
rather than +0. In several places the standard uses the word "should".
When a standard specifies a requirement, the word "shall" is typically used.
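The sqrt(-0) behavior can be observed from C. This is a small sketch, assuming the C implementation follows IEEE 754 for sqrt and signed zeros (as most current platforms do); is_negative_zero is a hypothetical helper. A plain comparison cannot tell the two zeros apart, so the sign bit is tested with the standard signbit macro.

```c
#include <math.h>

/* Returns 1 when x is a zero whose sign bit is set, that is, -0. */
int is_negative_zero(double x)
{
    return x == 0.0 && signbit(x) != 0;
}
```

Under that assumption, is_negative_zero(sqrt(-0.0)) is 1 while is_negative_zero(sqrt(0.0)) is 0, even though -0.0 == 0.0 compares as true.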

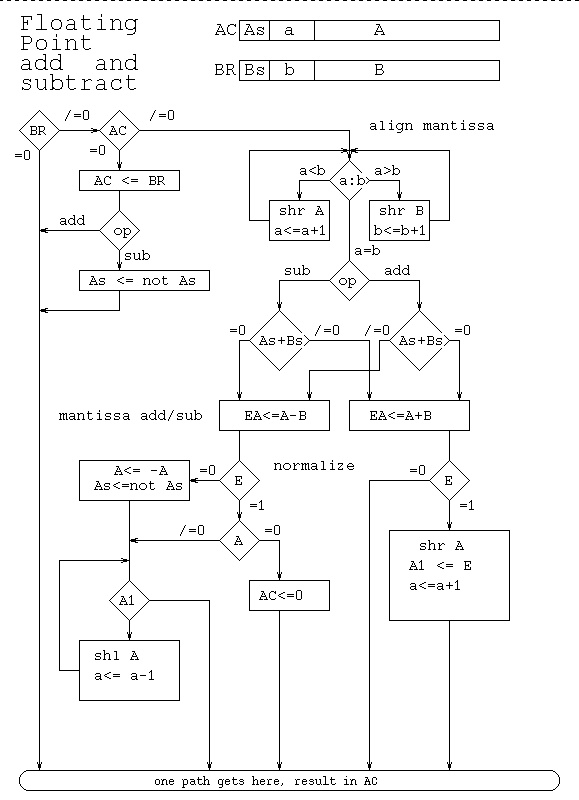

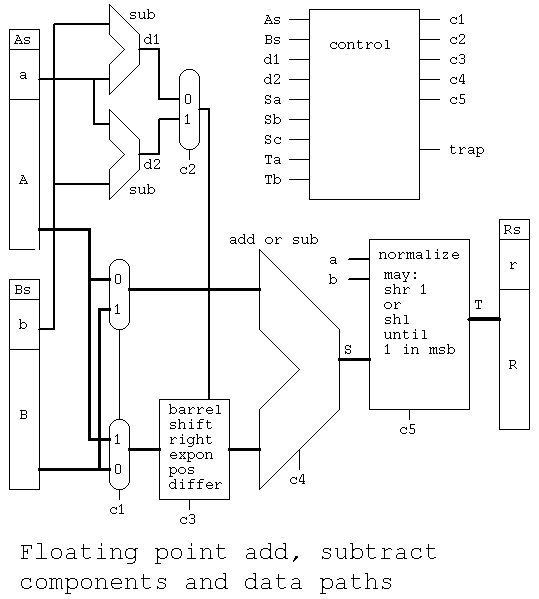

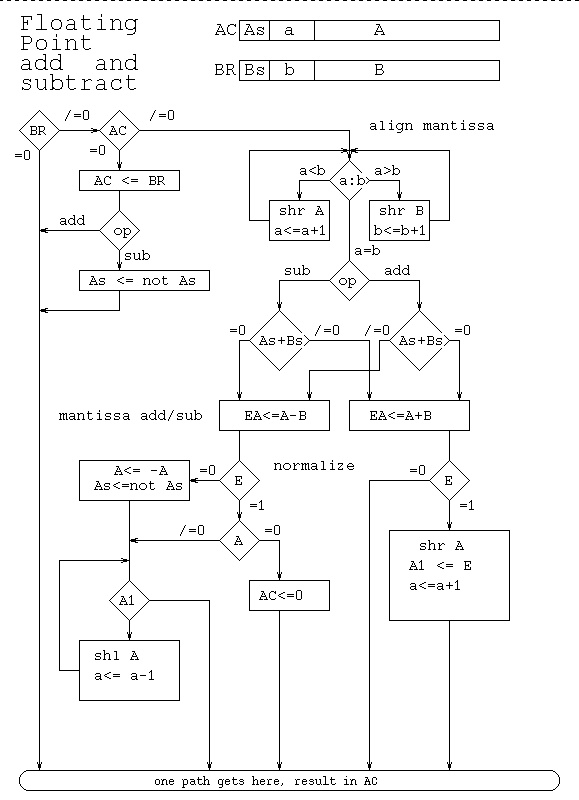

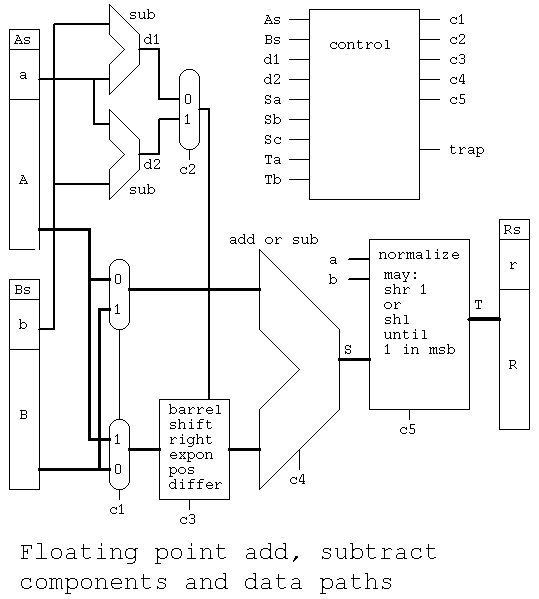

Basic decisions and operations for floating point add and subtract:

The decisions indicated above could be used to design the control

component shown in the data path diagram below:

A hint on normalization, using computer scientific notation:

1.0E-8 == 10.0E-9 == 0.01E-6 == 0.00000001 == 10ns == 0.01 microseconds

1.0E8 == 0.1E9 == 100.0E6 == 100,000,000 == 100MHz == 0.1 GHz

1.0/1.0GHz = 1ns clock period
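The equalities in this hint can be checked by letting C print each constant in normalized scientific notation. This is a minimal sketch; prints_as and clock_period are hypothetical helper names, and the expected text assumes the usual two-digit exponent produced by the %E conversion.

```c
#include <stdio.h>
#include <string.h>

/* Returns 1 when x, printed with one digit before and one digit
   after the decimal point, matches the expected text. */
int prints_as(double x, const char *expected)
{
    char buf[32];
    snprintf(buf, sizeof buf, "%.1E", x);
    return strcmp(buf, expected) == 0;
}

/* Clock period in seconds for a clock frequency given in Hz. */
double clock_period(double hz)
{
    return 1.0 / hz;
}
```

Here 10.0E-9 and 0.01E-6 both print as 1.0E-08, and 0.1E9 and 100.0E6 both print as 1.0E+08; clock_period(1.0E9) prints as 1.0E-09, the 1 ns period of a 1 GHz clock.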

Some graphics boards have large computing capacity and

some are releasing the specs so programmers can use the

computing capacity.

nVidia example 2007

512-core by 2011, more today

Programming 512 cores or more with CUDA or OpenCL is quite a challenge.

New languages are coming, not optimized yet.

Fortunately, CMSC 411 does not require VHDL for floating point,

just the ability to manually do floating point add, subtract,

multiply and divide. (Examples above and in class on board.)
