Abstract

While recent advances in computer vision have provided reliable methods to recognize actions in both images and videos, the problem of assessing how well people perform actions has been largely unexplored in computer vision. Since methods for assessing action quality have many real-world applications in healthcare, sports, and video retrieval, we believe the computer vision community should begin to tackle this challenging problem. To spur progress, we introduce a learning-based framework that takes steps towards assessing how well people perform actions in videos. Our approach works by training a regression model from spatiotemporal pose features to scores obtained from expert judges. Moreover, our approach can provide interpretable feedback on how people can improve their actions. We evaluate our method on a new Olympic sports dataset, and our experiments suggest our framework is able to rank the athletes more accurately than a non-expert human. While promising, our method is still a long way from rivaling the performance of expert judges, indicating that there is significant opportunity in computer vision research to improve on this difficult yet important task.

Introduction

Recent advances in computer vision have provided reliable methods for recognizing actions in videos and images. However, the problem of automatically quantifying how well people perform actions has been largely unexplored.

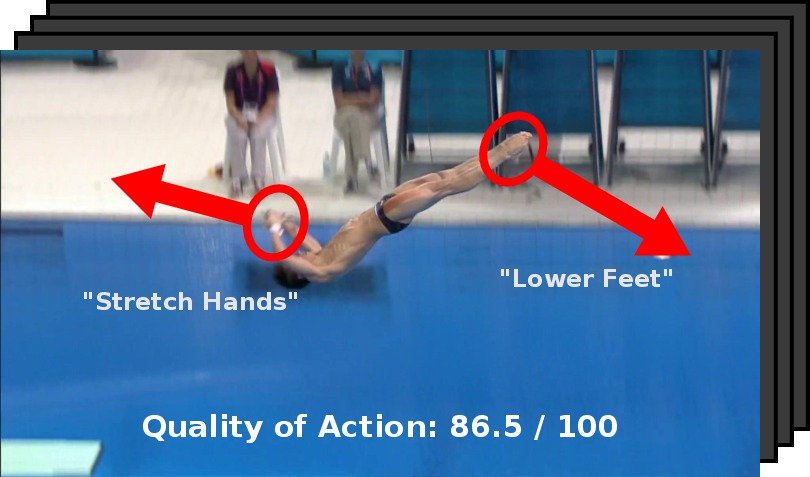

We introduce a learning framework for assessing the quality of human actions from videos. Since we estimate a model for what constitutes a high-quality action, our method can also provide feedback on how people can improve their actions, visualized here with red arrows.
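To make the idea of regressing from spatiotemporal pose features to judges' scores concrete, here is a minimal sketch in plain Python. It is not the released code. The choice of low-frequency DCT magnitudes as the temporal feature, the ridge-style linear regressor fitted by gradient descent, and all function names (`dct_features`, `fit_linear`, `predict`) are assumptions made for illustration only.

```python
import math


def dct_features(trajectory, k):
    """Magnitudes of the first k DCT-II coefficients of a 1-D joint trajectory.

    Assumption: one coordinate of one tracked joint over time; a real
    pipeline would concatenate such features over many joints.
    """
    n = len(trajectory)
    return [
        abs(sum(x * math.cos(math.pi * (t + 0.5) * f / n)
                for t, x in enumerate(trajectory)))
        for f in range(k)
    ]


def predict(w, b, x):
    """Linear score prediction: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def fit_linear(features, scores, l2=1e-3, lr=0.1, steps=5000):
    """L2-regularized linear regression fitted by batch gradient descent.

    Hypothetical stand-in for whatever regressor is actually used.
    """
    dim = len(features[0])
    m = len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w = [l2 * wi for wi in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for x, y in zip(features, scores):
            err = predict(w, b, x) - y
            grad_b += err / m
            for i in range(dim):
                grad_w[i] += err * x[i] / m
        w = [wi - lr * gi for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy usage: made-up trajectories and made-up "judge" scores.
toy_trajectories = [
    [0.0, 0.1, 0.2, 0.3, 0.4, 0.5],
    [0.0, 0.4, 0.1, 0.5, 0.2, 0.6],
    [0.3, 0.3, 0.3, 0.3, 0.3, 0.3],
]
toy_scores = [8.0, 5.0, 6.5]
toy_features = [dct_features(traj, k=3) for traj in toy_trajectories]
weights, bias = fit_linear(toy_features, toy_scores)
toy_predictions = [predict(weights, bias, f) for f in toy_features]
```

For a linear model like this one, the gradient of the predicted score with respect to the features is simply the weight vector, which hints at how a learned quality model could indicate the direction in which a performance should change. How that direction is mapped back to joint positions and drawn as arrows is not covered by this sketch.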

Check out our paper to learn more.

Examples

Dataset and code

To quantify the performance of our methods, we introduce a new dataset for action quality assessment composed of Olympic sports footage.

Download the README (mirror1) [3MB].

Download the code (mirror1) [3MB].

Download the dataset (mirror1) [18GB].

Download the pre-computed and tracked human pose (mirror1) [200MB].

Download the frames for a sample diving instance (mirror1) [25MB].