Ph.D. Dissertation Defense

Clustering and Visualization Techniques

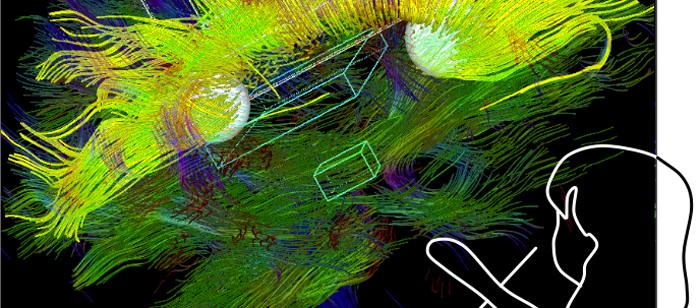

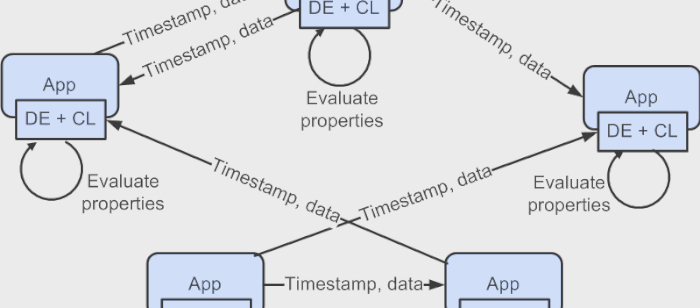

for Aggregate Trajectory Analysis

David Trimm

1:00pm Thursday, 15 March 2012, ITE 365

Analyzing large trajectory sets enables deeper insights into many real-world problems. For example, animal migration studies, multi-agent analysis, and virtual entertainment can all benefit from conclusions drawn from large sets of trajectory data. However, traditional analytic techniques complicate the analysis in several ways. Directly visualizing the trajectory set results in a multitude of lines that cannot be easily understood, while statistical analysis methods and non-direct visualization techniques (e.g., parallel coordinates) produce conclusions that are non-intuitive and difficult to understand. This research develops a new approach to analyzing large trajectory sets that combines two complementary processes, clustering and visualization. First, clustering techniques are developed and refined to group related trajectories together. From these sets of similar trajectories, a trajectory composition visualization is created and implemented that clearly depicts the cluster characteristics, including application-specific attributes. The effectiveness of the approach is demonstrated on two distinct data sets, resulting in actionable conclusions. The first application, multi-agent analysis, involves a rich spatial data set that, when analyzed using this approach, shows ways to improve the underlying artificial intelligence algorithms. The second application, student course-grade history analysis, requires tailoring the approach to a non-spatial data set. Even so, the results enable a clear understanding of which courses are most critical in a student's career and which student groups require assistance to succeed. In summary, this research contributes methods for trajectory clustering, techniques for large-scale visualization of trajectory data, and processes for analyzing student data.

Committee

- Dr. Penny Rheingans (chair)

- Dr. Marie desJardins

- Dr. Anupam Joshi

- Dr. Marc Olano

- Dr. Sreedevi Sampath