Ph.D. Dissertation Defense

Automatic Service Search and Composability Analysis in Large Scale Service Networks

Yunsu Lee

10:00am Wednesday 25 November 2015, ITE 346, UMBC

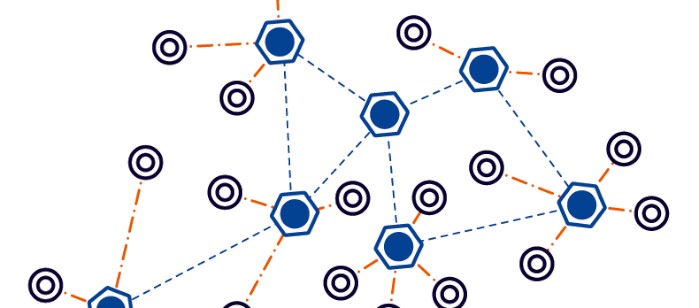

Currently, software and hardware system components are increasingly modularized and virtualized as atomic services in the cloud. A number of cloud platforms and marketplaces are available where anyone can offer system components as services. In this situation, service composition is essential, because the functionality offered by a single atomic service might not satisfy users’ complex requirements. Because many services are already available and the number of new services grows significantly over time, manual service composition is impractical.

In our research, we propose computer-aided methods to help find and compose appropriate services that fulfill users’ requirements in large-scale service networks. For this purpose, we explore the following methods. First, we develop a method for formally representing a service in terms of composability by considering various functional and non-functional characteristics of services. Second, we develop a method for aiding the development of the reference ontologies that are crucial for representing a service; we explore a bottom-up statistical method for this ontology development. Third, we architect a framework that encompasses the reference models, an effective strategy, and the necessary procedures for service search and composition. Finally, we develop a graph-based algorithm that is highly specialized for service search and composition. An experimental comparative performance analysis against existing automatic service composition methods is also provided.

Committee: Drs. Yun Peng (chair), Tim Finin, Yelena Yesha, Milton Halem, Nenad Ivezic (NIST), and Boonserm Kulvatunyou (NIST)