Note that this file is plain text and .gif files. The plain text spells out Greek letters because old browsers did not display them.

These are not intended to be complete lecture notes. Complicated figures or tables or formulas are included here in case they were not clear or not copied correctly in class. Information from the language definitions, automata definitions, computability definitions and class definitions is not duplicated here. Lecture numbers correspond to the syllabus numbering.

This course covers some of the fundamental beginnings and foundations of computers and software. From automata to Turing Machines, and from formal languages to our programming languages, it is an interesting progression.

A fast summary of what will be covered in this course (part 1)

For reference, skim through the automata definitions, language definitions and grammar definitions to get the terms that will be used in this course. Don't panic, this will be easy once it is explained.

The syllabus provides topics, homework and exams.

HW1 is assigned

Oh! "Formal Language" vs "Natural Language"

    Boy      hit        ball     .
    word     word       word     punctuation   tokens
    noun     verb       noun     period        parsed
    subject  predicate  object   sentence      semantic

"Language": all legal sentences

In this class a language will be a set of strings from a given alphabet. All math operations on sets apply, examples: union, concatenation, intersection, cross product, etc.

We will cover grammars that define languages. We will define machines called automata that accept languages.
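As a small illustration of a language being a set of strings, here is a sketch using Python sets. The two finite languages A and B are made up for this example and are not from the course material.

```python
# Two small finite languages over the alphabet {0, 1} (made-up examples).
A = {"0", "01"}
B = {"01", "1"}

union = A | B                                    # strings in A or in B
intersection = A & B                             # strings in both A and B
concatenation = {x + y for x in A for y in B}    # each x in A followed by each y in B
cross_product = {(x, y) for x in A for y in B}   # ordered pairs, not strings

print(sorted(union))
print(sorted(intersection))
print(sorted(concatenation))
print(len(cross_product))
```

Note that concatenation gives strings while cross product gives pairs, so the two results are different kinds of sets.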

A fast summary of what will be covered in this course (part 2) For reference, read through the computability definitions and complexity class definitions

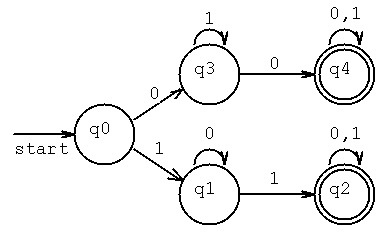

Example of a Deterministic Finite Automaton, DFA

Machine Definition M = (Q, Sigma, delta, q0, F)

Q = { q0, q1, q2, q3, q4 } the set of states (finite)

Sigma = { 0, 1 } the input string alphabet (finite)

delta the state transition table - below

q0 = q0 the starting state

F = { q2, q4 } the set of final states (accepting

when in this state and no more input)

                        inputs
         delta   |    0    |    1    |
        ---------+---------+---------+
            q0   |   q3    |   q1    |
            q1   |   q1    |   q2    |
    states  q2   |   q2    |   q2    |
            q3   |   q4    |   q3    |
            q4   |   q4    |   q4    |
            ^        ^         ^
            |        |         |
            |        +---------+-- every transition must have a state
            +-- every state must be listed

An exactly equivalent diagram description for the machine M.

Each circle is a unique state. The machine is in exactly one state

and stays in that state until an input arrives. Connection lines

with arrows represent a state transition from the present state

to the next state for the input symbol(s) by the line.

L(M) is the notation for a Formal Language defined by a machine M.

Some of the shortest strings in L(M) = { 00, 11, 000, 001, 010, 101, 110,

111, 0000, 0001, 0010, 0011, 0100, 0101, 0110, 1001, ... }

The regular expression for this state diagram is

r = (0(1*)0(0+1)*)+(1(0*)1(0+1)*) using + for or, * for kleene star

1|--|

| V

//--\\

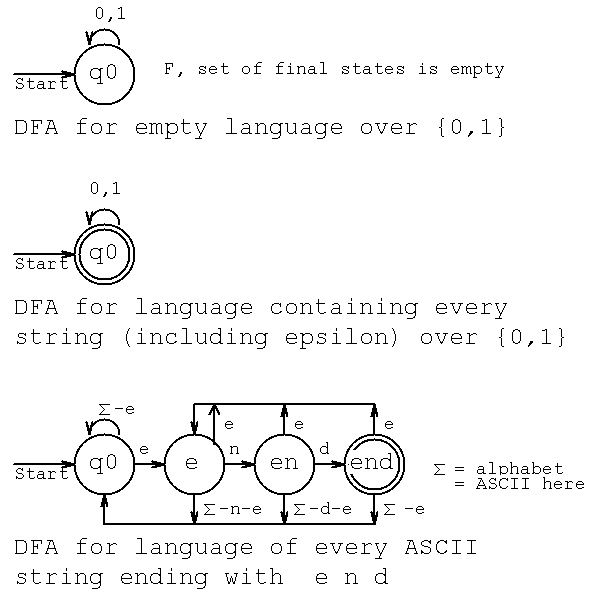

|| q3 || is a DFA with regular expression r = (1*)

\\--// zero or more sequence of 1 (infinite language)

0|--|

1| v

//--\\ is a DFA with regular expression r = (0+1)*

|| q4 || zero or more sequence of 0 or 1 in any order 00 01 10 11 etc

\\--// at end because final state (infinite language)

In words, L is the set of strings over { 0, 1} that contain at least

two 0's starting with 0, or that contain at least two 1's starting with 1.

Every input sequence goes through a sequence of states, for example

00 q0 q3 q4

11 q0 q1 q2

000 q0 q3 q4 q4

001 q0 q3 q4 q4

010 q0 q3 q3 q4

011 q0 q3 q3 q3

0110 q0 q3 q3 q3 q4
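The state sequences above can be reproduced with a few lines of code. This is a minimal sketch of the machine M, with the transition table copied from above; the function names trace and accepts are made up for this illustration and are not taken from the course simulators (dfa.cpp, dfa.py).

```python
# DFA M from the table above: DELTA[state][symbol] -> next state
DELTA = {
    "q0": {"0": "q3", "1": "q1"},
    "q1": {"0": "q1", "1": "q2"},
    "q2": {"0": "q2", "1": "q2"},
    "q3": {"0": "q4", "1": "q3"},
    "q4": {"0": "q4", "1": "q4"},
}
START = "q0"
FINAL = {"q2", "q4"}

def trace(string):
    """Return the list of states visited while reading string."""
    states = [START]
    for symbol in string:
        states.append(DELTA[states[-1]][symbol])
    return states

def accepts(string):
    """Accept when the input is used up and the machine is in a final state."""
    return trace(string)[-1] in FINAL

for s in ["00", "11", "000", "001", "010", "011", "0110"]:
    print(s, " ".join(trace(s)), "accept" if accepts(s) else "reject")
```

Because every state has exactly one entry for every input symbol, the sequence of states is unique for each input string.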

This may seem rather abstract, yet there are tens of millions of DFAs being used today.

practical example DFA

More information on DFA

More files, examples:

example data input file reg.dfa

example output from dfa.cpp, reg.out

under development dfa.java dfa.py

example output from dfa.java, reg_def_java.out

example output from dfa.py, labc_def_py.out

/--\ a /--\ b /--\ c //--\\

labc.dfa is -->| q0 |--->| q1 |--->| q2 |--->|| q3 || using just characters

\--/ \--/ \--/ \\--//

example data input file labc.dfa

example output from dfa.py, labc_def_py.out

For future homework, you can download programs and sample data from

cp /afs/umbc.edu/users/s/q/squire/pub/download/reg.dfa .

and other files

Definition of a Regular Expression

------------------

A regular expression may be the null string,

r = epsilon

A regular expression may be an element of the input alphabet, a in sigma,

r = a

A regular expression may be the union of two regular expressions,

r = r1 + r2 the plus sign is "or"

A regular expression may be the concatenation (no symbol) of two

regular expressions,

r = r1 r2

A regular expression may be the Kleene closure (star) of a

regular expression, may be null string or any number of concatenations of r1

r = r1* (the asterisk should be a superscript,

but this is plain text)

A regular expression may be a regular expression in parentheses

r = (r1)

Nothing is a regular expression unless it is constructed with only the

rules given above.

The language represented or generated by a regular expression is a

Regular Language, denoted L(r).

The regular expression for the machine M above is

r = (1(0*)1(0+1)*)+(0(1*)0(0+1)*)

\ \ any number of zeros and ones

\ any number of zero
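One way to gain confidence in this regular expression is to test it by brute force. The sketch below assumes a translation into Python's re syntax, where "+" (or) becomes "|" and (0+1) becomes [01], and compares it against the word description of L(M) for all strings up to length 8. The helper names are made up for this illustration.

```python
import re
from itertools import product

# r = (1(0*)1(0+1)*)+(0(1*)0(0+1)*) written in Python re syntax.
R = re.compile(r"(10*1[01]*)|(01*0[01]*)")

def in_language(s):
    """Word description of L(M): the first symbol occurs at least once more."""
    return len(s) >= 2 and s[0] in s[1:]

def all_strings(max_len):
    """Every string over {0, 1} of length 0 .. max_len."""
    for n in range(max_len + 1):
        for symbols in product("01", repeat=n):
            yield "".join(symbols)

mismatches = [s for s in all_strings(8)
              if (R.fullmatch(s) is not None) != in_language(s)]
print(mismatches)
```

An empty list means the two descriptions agree on every string tested. This is a check, not a proof.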

Later we will give an algorithm for generating a regular expression from

a machine definition. For simple DFA's, start with each accepting

state and work back to the start state writing the regular expression.

The union of these regular expressions is the regular expression for

the machine.

For every DFA there is a regular language and for every regular language

there is a regular expression. Thus a DFA can be converted to a

regular expression and a regular expression can be converted to a DFA.

Some examples: a regular language with only 0 in the alphabet, with strings of length 2*n+1 for n>0. The language is infinite, yet the DFA must have a finite number of states and a finite number of transitions to accept {000, 00000, 0000000, ...}, 2*n+1 = 3,5,7,...

The DFA state diagram is

     /--\  0   /--\  0   /--\  0   //--\\  0
    | q0 |--->| q1 |--->| q2 |--->|| q3 ||--+
     \--/      \--/      \--/      \\--//   |
                          ^                 |
                          +-----------------+

Check it out: 000 accepted, then 00000 two more 0 then accepted, ...
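A quick check of this machine in code, as a sketch. It assumes the four states are named q0, q1, q2, q3 from left to right, that the last state is the only final state, and that a further 0 from the final state returns to the third state.

```python
# Four-state DFA over the alphabet {0}; q3 is the only final state.
DELTA = {"q0": "q1", "q1": "q2", "q2": "q3", "q3": "q2"}   # next state on input 0

def accepts(string):
    state = "q0"
    for _ in string:            # every symbol is a 0
        state = DELTA[state]
    return state == "q3"

accepted_lengths = [n for n in range(12) if accepts("0" * n)]
print(accepted_lengths)
```

Four states are enough for an infinite language because the loop between the last two states is reused for every extra pair of zeros.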

Now, an example showing that the complement of a regular expression is a regular expression. First a DFA that accepts any string with 00, then a DFA that does not accept any string with 00, the complement.

          1                       0,1
         +--+                    +--+
         |  v                    |  v
         /--\    0    /--\   0   //--\\
     -->| q0 |------>| q1 |---->|| q2 ||    r = (1+01)*00(0+1)*
         \--/         \--/       \\--//     any 00
          ^            |                    (1+01)* can be empty string
          +-----1------+

          1                         0,1
         +--+                      +--+
         |  v                      |  v
        //--\\   0    //--\\   0    /--\
    -->|| q0 ||----->|| q1 ||----->| q2 |   r = (1+01)*(0+epsilon)
        \\--//        \\--//        \--/    no 00
          ^             |                   q2 never leaves, never accepted
          +------1------+

The complement machine has the same states and transitions. Only the final states change: q0 and q1 are final (no 00 has been seen yet) and q2 is not.

The general way to create a complement of a regular expression is to

create a new regular expression that has all of the first regular

expression go to phi and all other strings go to a final state.

Both regular expressions must have the same alphabet.
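On the machine side, one standard way to get the complement is to keep the DFA and swap final and non-final states. This is stated here as a common construction, not necessarily the exact method intended above. The sketch assumes the three-state DFA for "contains 00" with states q0, q1, q2 and checks both machines against Python's substring test.

```python
from itertools import product

# DFA for "contains 00": q0 = no pending 0, q1 = just read one 0, q2 = seen 00.
STATES = {"q0", "q1", "q2"}
DELTA = {
    "q0": {"0": "q1", "1": "q0"},
    "q1": {"0": "q2", "1": "q0"},
    "q2": {"0": "q2", "1": "q2"},
}
FINAL = {"q2"}
FINAL_COMPLEMENT = STATES - FINAL    # swap final and non-final states

def accepts(string, final):
    state = "q0"
    for symbol in string:
        state = DELTA[state][symbol]
    return state in final

for n in range(7):
    for symbols in product("01", repeat=n):
        s = "".join(symbols)
        assert accepts(s, FINAL) == ("00" in s)
        assert accepts(s, FINAL_COMPLEMENT) == ("00" not in s)
print("complement checked for all strings up to length 6")
```

The swap only works on a DFA where every state has a transition for every input symbol, which is why both machines must use the same alphabet.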

Given a DFA and one or more strings, determine if the string(s) are accepted by the DFA. This may be error prone and time consuming to do by hand. Fortunately, there is a program available to do this for you.

On linux.gl.umbc.edu do the following:

ln -s /afs/umbc.edu/users/s/q/squire/pub/dfa dfa

cp /afs/umbc.edu/users/s/q/squire/pub/download/ab_b.dfa .

dfa < ab_b.dfa # or dfa < ab_b.dfa > ab_a.out

Full information is available at Simulators

The source code for the family of simulators is available.

HW2 is assigned

last update 3/9/2021

Here, we start with the state diagram:

From this we see the states

Q = { Off, Starting forward, Running forward, Starting reverse, Running reverse }

The alphabet (inputs)

Sigma = { off request, forward request, reverse request, time-out }

The initial state would be Off. The final state would be Off.

The state transition table, Delta, is obvious yet very wordy.

Note that upon entering a state, additional actions may be specified. Inputs could be from buttons, yet could come from a computer.

Important! nondeterministic has nothing to do with random,

nondeterministic implies parallelism.

The difference between a DFA and a NFA is that the state transition

table, delta, for a DFA has exactly one target state but for a NFA has

a set, possibly empty (phi), of target states.

A NFA state diagram may have more than one line to get

to some state.

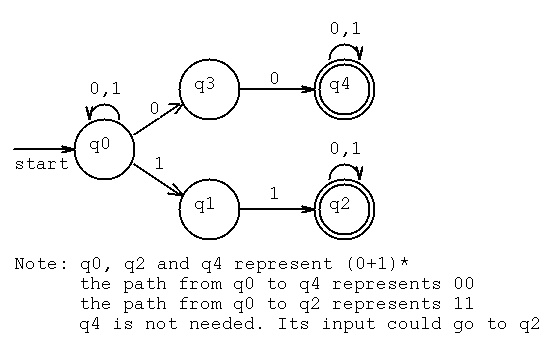

Example of a NFA, Nondeterministic Finite Automaton, given

by a) Machine Definition M = (Q, sigma, delta, q0, F)

b) Equivalent regular expression

c) Equivalent state transition diagram

and example tree of states for input string 0100011

and an equivalent DFA, Deterministic Finite Automata for the

first 3 states.

a) Machine Definition M = (Q, sigma, delta, q0, F)

Q = { q0, q1, q2, q3, q4 } the set of states

sigma = { 0, 1 } the input string alphabet

delta the state transition table

q0 = q0 the starting state

F = { q2, q4 } the set of final states (accepting when in

this state and no more input)

inputs

delta | 0 | 1 |

---------+---------+---------+

q0 | {q0,q3} | {q0,q1} |

q1 | phi | {q2} |

states q2 | {q2} | {q2} |

q3 | {q4} | phi |

q4 | {q4} | {q4} |

^ ^ ^

| | |

| +---------+-- a set of states, phi means empty set

+-- every state must be listed

b) The equivalent regular expression is (0+1)*(00+11)(0+1)*

This NFA represents the language L = all strings over {0,1} that

have at least two consecutive 0's or 1's.

c) Equivalent NFA state transition diagram.

Note that state q3 does not go anywhere for an input of 1.

We use the terminology that the path "dies" if in q3 getting an input 1.

The tree of states this NFA is in for the input 0100011

input

q0

/ \ 0

q3 q0

dies / \ 1

q1 q0

dies / \ 0

q3 q0

/ / \ 0

q4 q3 q0

/ / / \ 0

q4 q4 q3 q0

/ / dies / \ 1

q4 q4 q1 q0

/ / / / \ 1

q4 q4 q2 q1 q0

^ ^ ^

| | |

accepting paths in NFA tree
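The tree can be followed in code by keeping the set of all states the NFA is in after each input symbol. This is a minimal sketch with the transition table copied from above; the function name run is made up for this illustration. A set keeps each state once, so the two q4 leaves of the tree show up as a single q4.

```python
# NFA from the table above; a missing entry means phi (the path dies).
DELTA = {
    ("q0", "0"): {"q0", "q3"}, ("q0", "1"): {"q0", "q1"},
    ("q1", "1"): {"q2"},
    ("q2", "0"): {"q2"},       ("q2", "1"): {"q2"},
    ("q3", "0"): {"q4"},
    ("q4", "0"): {"q4"},       ("q4", "1"): {"q4"},
}
FINAL = {"q2", "q4"}

def run(string, start="q0"):
    """Return the set of live states before any input and after each symbol."""
    current = {start}
    history = [current]
    for symbol in string:
        current = set().union(*(DELTA.get((q, symbol), set()) for q in current))
        history.append(current)
    return history

history = run("0100011")
for states in history:
    print(sorted(states))
print("accept" if history[-1] & FINAL else "reject")
```

The NFA accepts when at least one state in the last set is a final state.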

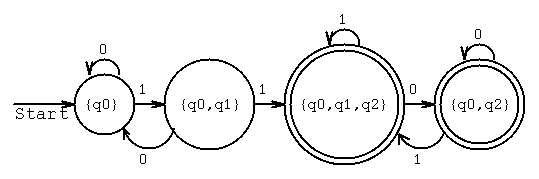

Construct a DFA equivalent to the NFA above using just the first

three rows of delta (for brevity, consider that q3 and q4 do not exist).

The DFA machine is M' = (Q', sigma, delta', q0', F')

The set of states is

Q' = 2**Q, the power set of Q = { phi, {q0}, {q1}, {q2}, {q0,q1}, {q0,q2},

{q1,q2}, {q0,q1,q2} }

Note the big expansion: If a set has n items, the power set has 2^n items.

Note: read the eight elements of the set Q' as names of states of M'

OK to use [ ] in place of { } if you prefer.

sigma is the same sigma = { 0, 1}

The state transition table delta' is given below

The starting state is set containing only q0 q0' = {q0}

The set of final states is a set of sets that contain q2

F' = { {q2}, {q0,q2}, {q1,q2}, {q0,q1,q2} }

Algorithm for building delta' from delta:

The delta' is constructed directly from delta.

Using the notation f'({q0},0) = f(q0,0) to mean:

in delta' in state {q0} with input 0 goes to the state

shown in delta with input 0. Take the union of all such states.

Further notation, phi is the empty set so phi union the set A is

just the set A.

Some samples: f'({q0,q1},0) = f(q0,0) union f(q1,0) = {q0}

f'({q0,q1},1) = f(q0,1) union f(q1,1) = {q0,q1,q2}

f'({q0,q2},0) = f(q0,0) union f(q2,0) = {q0,q2}

f'({q0,q2},1) = f(q0,1) union f(q2,1) = {q0,q1,q2}

sigma

delta | 0 | 1 | simplified for this construction

---------+---------+---------+

q0 | {q0} | {q0,q1} |

states q1 | phi | {q2} |

q2 | {q2} | {q2} |

sigma

delta' | 0 | 1 |

----------+-------------+-------------+

phi | phi | phi | never reached

{q0} | {q0} | {q0,q1} |

states {q1} | phi | {q2} | never reached

Q' {q2} | {q2} | {q2} | never reached

{q0,q1} | {q0} | {q0,q1,q2} |

{q0,q2} | {q0,q2} | {q0,q1,q2} |

{q1,q2} | {q2} | {q2} | never reached

{q0,q1,q2} | {q0,q2} | {q0,q1,q2} |

Note: Some of the states in the DFA may be unreachable yet

must be specified. Later we will use Myhill-Nerode minimization.

DFA (not minimized) equivalent to lower branch of NFA above.

The sequence of states is unique for a DFA, so for the same input as

above 0100011 the sequence of states is

{q0} 0 {q0} 1 {q0,q1} 0 {q0} 0 {q0} 0 {q0} 1 {q0,q1}

1 {q0,q1,q2}

This sequence does not have any states involving q3 or q4 because just

a part of the above NFA was converted to a DFA. This DFA does not

accept the string 00 whereas the NFA above does accept 00.
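The construction of delta' can be sketched in code. This version builds only the subsets reachable from {q0}, so the rows marked "never reached" do not appear. The simplified three-row delta is copied from above; the function names are made up for this illustration.

```python
# Simplified NFA (first three rows of delta); a missing entry means phi.
DELTA = {
    ("q0", "0"): {"q0"}, ("q0", "1"): {"q0", "q1"},
    ("q1", "1"): {"q2"},
    ("q2", "0"): {"q2"}, ("q2", "1"): {"q2"},
}
SIGMA = ["0", "1"]
NFA_FINAL = {"q2"}

def step(subset, symbol):
    """delta'(subset, symbol) = union of delta(q, symbol) for q in subset."""
    result = set()
    for q in subset:
        result |= DELTA.get((q, symbol), set())
    return frozenset(result)

def reachable_dfa(start):
    """Build delta' only for the subsets reachable from the start subset."""
    table, todo = {}, [frozenset(start)]
    while todo:
        subset = todo.pop()
        if subset in table:
            continue
        table[subset] = {a: step(subset, a) for a in SIGMA}
        todo.extend(table[subset].values())
    return table

table = reachable_dfa({"q0"})
for subset, row in table.items():
    print(sorted(subset), {a: sorted(t) for a, t in row.items()})

def accepts(string):
    state = frozenset({"q0"})
    for symbol in string:
        state = table[state][symbol]
    return bool(state & NFA_FINAL)   # final if the subset contains q2

print(accepts("0100011"), accepts("00"))
```

Building only the reachable subsets avoids listing all 2^n rows, at the cost of not showing the unreachable states that the full definition of Q' requires.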

Given a NFA and one or more strings, determine if the string(s)

are accepted by the NFA. This may be error prone and time

consuming to do by hand. Fortunately, there is a program

available to do this for you.

On linux.gl.umbc.edu do the following:

ln -s /afs/umbc.edu/users/s/q/squire/pub/nfa nfa

cp /afs/umbc.edu/users/s/q/squire/pub/download/fig2_7.nfa .

nfa < fig2_7.nfa # or nfa < fig2_7.nfa > fig2_7.out

NFA coded for simulation

You can code a Machine Definition into a data file for simulation

The machine definition:

    Q = { q0, q1, q2, q3, q4 }
    sigma = { 0, 1 }
    delta (table below)
    q0 = q0
    F = { q2, q4 }

                         sigma
         delta   |    0    |    1    |
        ---------+---------+---------+
            q0   | {q0,q3} | {q0,q1} |
            q1   |   phi   |  {q2}   |
    states  q2   |  {q2}   |  {q2}   |
            q3   |  {q4}   |   phi   |
            q4   |  {q4}   |  {q4}   |

The same machine coded as the data file:

    // fig2_7.nfa
    start q0
    final q2
    final q4
    q0 0 q0
    q0 0 q3
    q0 1 q0
    q0 1 q1
    q1 1 q2
    q2 0 q2
    q2 1 q2
    q3 0 q4
    q4 0 q4
    q4 1 q4
    enddef
    tape 10101010 // reject
    tape 10110101 // accept
    tape 10100101 // accept
    tape 01010101 // reject

Full information is available at Simulators

The source code for the family of simulators is available.

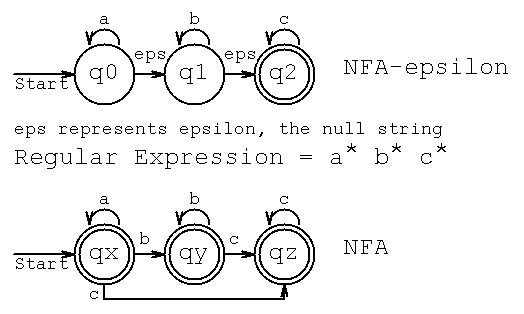

Definition and example of a NFA with epsilon transitions.

Remember, epsilon is the zero length string, so it can be anywhere

in the input string, front, back, between any symbols.

There is a conversion algorithm from a NFA with epsilon transitions to

a NFA without epsilon transitions.

Consider the NFA-epsilon move machine M = ( Q, sigma, delta, q0, F )

Q = { q0, q1, q2 }

sigma = { a, b, c } and epsilon moves

q0 = q0

F = { q2 }

sigma plus epsilon

delta | a | b | c |epsilon

------+------+------+------+-------

q0 | {q0} | phi | phi | {q1}

------+------+------+------+-------

q1 | phi | {q1} | phi | {q2}

------+------+------+------+-------

q2 | phi | phi | {q2} | {q2}

------+------+------+------+-------

The language accepted by the above NFA with epsilon moves is

the set of strings over {a,b,c} including the null string and

all strings with any number of a's followed by any number of b's

followed by any number of c's. ("any number" includes zero)

Now convert the NFA with epsilon moves to a NFA

M = ( Q', sigma, delta', q0', F')

First determine the states of the new machine, Q' = the epsilon closures of the states in the NFA with epsilon moves. There will be the same number of states, but the names can be constructed by writing the state name as the set of states in the epsilon closure. The epsilon closure of a state is that state itself and all states that can be reached from it by one or more epsilon moves.

Thus q0 in the NFA-epsilon becomes {q0,q1,q2} because the machine can

move from q0 to q1 by an epsilon move, then check q1 and find that

it can move from q1 to q2 by an epsilon move.

q1 in the NFA-epsilon becomes {q1,q2} because the machine can move from

q1 to q2 by an epsilon move.

q2 in the NFA-epsilon becomes {q2} just to keep the notation the same. On an epsilon move q2 can go nowhere except to q2 itself.

We do not show the epsilon transition of a state to itself here, but,

beware, we will take into account the state to itself epsilon transition

when converting NFA's to regular expressions.

The initial state of our new machine is {q0,q1,q2} the epsilon closure

of q0

The final state(s) of our new machine is the new state(s) that contain

a state symbol that was a final state in the original machine.

The new machine accepts the same language as the old machine,

thus same sigma.

So far we have for our new NFA

Q' = { {q0,q1,q2}, {q1,q2}, {q2} } or renamed { qx, qy, qz }

sigma = { a, b, c }

F' = { {q0,q1,q2}, {q1,q2}, {q2} } or renamed { qx, qy, qz }

q0 = {q0,q1,q2} or renamed qx

inputs

delta' | a | b | c

------------+--------------+--------------+--------------

qx or {q0,q1,q2} | | |

------------+--------------+--------------+--------------

qy or {q1,q2} | | |

------------+--------------+--------------+--------------

qz or {q2} | | |

------------+--------------+--------------+--------------

Now we fill in the transitions. Remember that a NFA has transition

entries that are sets. Further, the names in the transition entry

sets must be only the state names from Q'.

Very carefully consider each of the old machine's transitions in the first row. You can ignore any "phi" entries and ignore the "epsilon" column. In the old machine delta(q0,a)={q0}, thus in the new machine delta'({q0,q1,q2},a)={{q0,q1,q2}}. This is just because the new machine accepts the same language as the old machine and must at least have the same transitions for the new state names.

inputs

delta' | a | b | c

------------+--------------+--------------+--------------

qx or {q0,q1,q2} | {{q0,q1,q2}} | |

------------+--------------+--------------+--------------

qy or {q1,q2} | | |

------------+--------------+--------------+--------------

qz or {q2} | | |

------------+--------------+--------------+--------------

No more entries go under input a in the first row because

old delta(q1,a)=phi, delta(q2,a)=phi

inputs

delta' | a | b | c

------------+--------------+--------------+--------------

qx or {q0,q1,q2} | {{q0,q1,q2}} | |

------------+--------------+--------------+--------------

qy or {q1,q2} | phi | |

------------+--------------+--------------+--------------

qz or {q2} | phi | |

------------+--------------+--------------+--------------

Now consider the input b in the first row: delta(q0,b)=phi, delta(q1,b)={q1} and delta(q2,b)=phi. The reason we considered q0, q1 and q2 in the old machine is that our new state has the symbols q0, q1 and q2 in its name from the epsilon closure. Since q1 is in {q0,q1,q2} and delta(q1,b)={q1}, then delta'({q0,q1,q2},b)={{q1,q2}}. WHY {q1,q2}? Because {q1,q2} is the new machine's name for the old machine's name q1. Just compare the zeroth column of delta to delta'. So we have

inputs

delta' | a | b | c

------------+--------------+--------------+--------------

qx or {q0,q1,q2} | {{q0,q1,q2}} | {{q1,q2}} |

------------+--------------+--------------+--------------

qy or {q1,q2} | phi | |

------------+--------------+--------------+--------------

qz or {q2} | phi | |

------------+--------------+--------------+--------------

Now, because our new qx state has a symbol q2 in its name and

delta(q2,c)=q2 is in the old machine, the new name for the old q2,

which is qz or {q2} is put into the input c transition in row 1.

inputs

delta' | a | b | c

------------+--------------+--------------+--------------

qx or {q0,q1,q2} | {{q0,q1,q2}} | {{q1,q2}} | {{q2}} or qz

------------+--------------+--------------+--------------

qy or {q1,q2} | phi | |

------------+--------------+--------------+--------------

qz or {q2} | phi | |

------------+--------------+--------------+--------------

Now, tediously, move on to row two ..., column b ...

You are considering all transitions in the old machine, delta,

for all old machine state symbols in the name of the new machines states.

Find the old machine state that results from an input and translate

the old machine state to the corresponding new machine state name and

put the new machine state name in the set in delta'. Below are the

"long new state names" and the renamed state names in delta'.

inputs

delta' | a | b | c

------------+--------------+--------------+--------------

qx or {q0,q1,q2} | {{q0,q1,q2}} | {{q1,q2}} | {{q2}} or {qz}

------------+--------------+--------------+--------------

qy or {q1,q2} | phi | {{q1,q2}} | {{q2}} or {qz}

------------+--------------+--------------+--------------

qz or {q2} | phi | phi | {{q2}} or {qz}

------------+--------------+--------------+--------------

inputs

delta' | a | b | c <-- input alphabet sigma

---+------+------+-----

/ qx | {qx} | {qy} | {qz}

/ ---+------+------+-----

Q' qy | phi | {qy} | {qz}

\ ---+------+------+-----

\ qz | phi | phi | {qz}

---+------+------+-----

The figure above labeled NFA shows this state transition table.

It seems rather trivial to add the column for epsilon transitions,

but we will make good use of this in converting regular expressions

to machines. regular-expression -> NFA-epsilon -> NFA -> DFA.
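The conversion worked through above can be sketched in code: compute the epsilon closure of each old state, use the closures as the new state names, and translate each old target state to its new name. The table is copied from above, with "eps" standing for the epsilon column; all function and variable names are made up for this illustration.

```python
# NFA with epsilon moves from the table above; a missing entry means phi.
DELTA = {
    ("q0", "a"): {"q0"}, ("q0", "eps"): {"q1"},
    ("q1", "b"): {"q1"}, ("q1", "eps"): {"q2"},
    ("q2", "c"): {"q2"}, ("q2", "eps"): {"q2"},
}
STATES = ["q0", "q1", "q2"]
SIGMA = ["a", "b", "c"]
FINAL = {"q2"}

def eclose(state):
    """The state itself plus every state reachable by epsilon moves."""
    closure, todo = {state}, [state]
    while todo:
        q = todo.pop()
        for nxt in DELTA.get((q, "eps"), set()):
            if nxt not in closure:
                closure.add(nxt)
                todo.append(nxt)
    return frozenset(closure)

# New state names are the closures: qx = eclose(q0), qy = eclose(q1), qz = eclose(q2).
new_name = {q: eclose(q) for q in STATES}

# delta'(name, x): for each old state in the name, follow x in the old
# machine and translate each old target state to its new name.
new_delta = {
    (name, x): {new_name[t] for q in name for t in DELTA.get((q, x), set())}
    for name in new_name.values() for x in SIGMA
}
new_final = {name for name in new_name.values() if name & FINAL}

for (name, x), targets in new_delta.items():
    print(sorted(name), x, [sorted(t) for t in targets])
```

An empty list in the output corresponds to a phi entry in delta'.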

HW3 is assigned

Given a regular expression, r , there is an associated regular language L(r).

Since there is a finite automata for every regular language,

there is a machine, M, for every regular expression such that L(M) = L(r).

The constructive proof provides an algorithm for constructing a machine, M,

from a regular expression r. The six constructions below correspond to

the cases:

1) The entire regular expression is the null string, i.e. L={epsilon}

(Any regular expression that includes epsilon will have the

starting state as a final state.)

r = epsilon

2) The entire regular expression is empty, i.e. L=phi r = phi

3) An element of the input alphabet, sigma, is in the regular expression

r = a where a is an element of sigma.

4) Two regular expressions are joined by the union operator, +

r1 + r2

5) Two regular expressions are joined by concatenation (no symbol)

r1 r2

6) A regular expression has the Kleene closure (star) applied to it

r*

The construction proceeds by using 1) or 2) if either applies.

The construction first converts all symbols in the regular expression

using construction 3).

Then working from inside outward, left to right at the same scope,

apply the one construction that applies from 4) 5) or 6).

Example: Convert (00 + 1)* 1 (0 +1) to a NFA-epsilon machine.

Optimization hint: We use a simple form of epsilon-closure to combine

any state that has only an epsilon transition to another state, into

one state.

chose first 0 to get

chose next 0, and concatenate

use epsilon-closure to combine states

Chose 1, then added union

Apply Kleene star

Chose 1, concatenate, combine states

Concatenate (0+1), combine one state

The result is a NFA with epsilon moves. This NFA can then be converted

to a NFA without epsilon moves. Further conversion can be performed to

get a DFA. All these machines have the same language as the

regular expression from which they were constructed.

The construction covers all possible cases that can occur in any

regular expression. Because of the generality there are many more

states generated than are necessary. The unnecessary states are

joined by epsilon transitions. Very careful compression may be

performed. For example, the fragment regular expression aba would be

    q0 --a--> q1 --e--> q2 --b--> q3 --e--> q4 --a--> q5

with e used for epsilon, this can be trivially reduced to

    q0 --a--> q1 --b--> q2 --a--> q3

A careful reduction of unnecessary states requires use of the

Myhill-Nerode Theorem of section 3.4 in 1st Ed. or section 4.4 in 2nd Ed.

This will provide a DFA that has the minimum number of states.

Within a renaming of the states and reordering of the delta, state

transition table, all minimum machines of a DFA are identical.

Conversion of a NFA to a regular expression was started in this

lecture and finished in the next lecture. The notes are in lecture 7.

Example: r = (0+1)* (00+11) (0+1)*

Solution: find the primary operator(s) that are concatenation or union.

In this case, the two outermost are concatenation, giving, crudely:

//---------------\ /----------------\\ /-----------------\

-->|| <> M((0+1)*) <> |->| <> M((00+11)) <> ||->| <> M((0+1)*) <<>> |

\\---------------/ \----------------// \-----------------/

There is exactly one start "-->" and exactly one final state "<<>>"

The unlabeled arrows should be labeled with epsilon.

Now recursively decompose each internal regular expression.
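The recursive decomposition can be sketched in code. This is a Thompson-style construction that gives every piece its own start and final state joined by epsilon moves. It may differ in detail from the six constructions drawn in class, and it makes no attempt to combine states. The tuple encoding of regular expressions and all names below are assumptions made for this illustration.

```python
from itertools import count

EPS = None   # label used for epsilon moves

class Builder:
    """Builds an NFA with epsilon moves; each piece has one start and one final state."""
    def __init__(self):
        self.ids = count()
        self.moves = {}      # (state, label) -> set of next states

    def add(self, src, label, dst):
        self.moves.setdefault((src, label), set()).add(dst)

    def build(self, r):
        kind = r[0]
        start, final = next(self.ids), next(self.ids)
        if kind == "eps":                    # case 1: r = epsilon
            self.add(start, EPS, final)
        elif kind == "phi":                  # case 2: r = phi, no transition at all
            pass
        elif kind == "sym":                  # case 3: r = a
            self.add(start, r[1], final)
        elif kind == "union":                # case 4: r1 + r2
            for part in r[1:]:
                s, f = self.build(part)
                self.add(start, EPS, s)
                self.add(f, EPS, final)
        elif kind == "cat":                  # case 5: r1 r2
            s1, f1 = self.build(r[1])
            s2, f2 = self.build(r[2])
            self.add(start, EPS, s1)
            self.add(f1, EPS, s2)
            self.add(f2, EPS, final)
        elif kind == "star":                 # case 6: r*
            s, f = self.build(r[1])
            self.add(start, EPS, s)
            self.add(start, EPS, final)      # zero repetitions
            self.add(f, EPS, s)              # repeat
            self.add(f, EPS, final)
        return start, final

def eclose(moves, states):
    """All states reachable from the given states by epsilon moves."""
    closure, todo = set(states), list(states)
    while todo:
        q = todo.pop()
        for nxt in moves.get((q, EPS), ()):
            if nxt not in closure:
                closure.add(nxt)
                todo.append(nxt)
    return closure

def accepts(r, string):
    b = Builder()
    start, final = b.build(r)
    current = eclose(b.moves, {start})
    for symbol in string:
        moved = set()
        for q in current:
            moved |= b.moves.get((q, symbol), set())
        current = eclose(b.moves, moved)
    return final in current

def sym(a):
    return ("sym", a)

any01 = ("star", ("union", sym("0"), sym("1")))
pair = ("union", ("cat", sym("0"), sym("0")), ("cat", sym("1"), sym("1")))
r = ("cat", any01, ("cat", pair, any01))     # (0+1)* (00+11) (0+1)*
print(accepts(r, "0100011"), accepts(r, "0101"))
```

The machine built this way has many more states than necessary, which is the price of a construction that covers every case without special handling.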

Conversion algorithm from a NFA to a regular expression.

Start with the transition table for the NFA with the following

state naming conventions:

the first state is 1 or q1 or s1 which is the starting state.

states are numbered consecutively, 1, 2, 3, ... n

The transition table is a typical NFA where the table entries

are sets of states and phi the empty set is allowed.

The set F of final states must be known.

We call the variable r a regular expression.

It can be: epsilon, an element of the input sigma, r*, r1+r2, or r1 r2

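We use r_{ij} for the regular expression that takes the machine from state qi to state qj.

The construction takes multiple steps, and a superscript k shows the step: r_{ij}^{k} describes the paths from qi to qj whose intermediate states are all numbered k or lower. For example

    r_{12}^{1}   r_{34}^{2}   r_{1k}^{k}   r_{ij}^{k-1}

are just names of different regular expressions.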

We are going to build a table with n^2 rows and n+1 columns labeled as below (shown for n = 3):

           |    k=0     |    k=1     |    k=2     | ... |    k=n
    -------+------------+------------+------------+-----+------------
    r_{11} | r_{11}^{0} | r_{11}^{1} | r_{11}^{2} | ... | r_{11}^{n}
    r_{12} | r_{12}^{0} | r_{12}^{1} | r_{12}^{2} | ... | r_{12}^{n}
    r_{13} | r_{13}^{0} | r_{13}^{1} | r_{13}^{2} | ... | r_{13}^{n}
    r_{21} | r_{21}^{0} | r_{21}^{1} | r_{21}^{2} | ... |
    r_{22} | r_{22}^{0} | r_{22}^{1} | r_{22}^{2} | ... |
    r_{23} | r_{23}^{0} | r_{23}^{1} | r_{23}^{2} | ... |
    r_{31} | r_{31}^{0} | r_{31}^{1} | r_{31}^{2} | ... |
    r_{32} | r_{32}^{0} | r_{32}^{1} | r_{32}^{2} | ... |
    r_{33} | r_{33}^{0} | r_{33}^{1} | r_{33}^{2} | ... |

Only build column n for q1 to a final state. The final regular expression is then the union, +, of the column n entries.

Note n^2 rows, all pairs of numbers from 1 to n.

Now, build the table entries for the k=0 column:

    r_{ij}^{0} = +{ x | delta(q_i, x) = q_j }              for i /= j
    r_{ij}^{0} = +{ x | delta(q_i, x) = q_j } + epsilon    for i = j

where delta is the transition table function,
x is some symbol from sigma,
the q's are states.

r_{ij}^{0} could be phi, epsilon, a, 0+1, or a+b+d+epsilon

notice there are no Kleene Star or concatenation in this column

Next, build the k=1 column:

    r_{ij}^{1} = r_{i1}^{0} (r_{11}^{0})* r_{1j}^{0} + r_{ij}^{0}

note: all items are from the previous column

Next, build the k=2 column:

    r_{ij}^{2} = r_{i2}^{1} (r_{22}^{1})* r_{2j}^{1} + r_{ij}^{1}

note: all items are from the previous column

Then, build the rest of the columns, for general k:

    r_{ij}^{k} = r_{ik}^{k-1} (r_{kk}^{k-1})* r_{kj}^{k-1} + r_{ij}^{k-1}

note: all items are from the previous column

Finally, for final states p, q, r the regular expression is

    r_{1p}^{n} + r_{1q}^{n} + r_{1r}^{n}

Note that this is from a constructive proof that every NFA has a language

for which there is a corresponding regular expression.

Some minimization rules for regular expressions are available.

These can be applied at every step.

(Actually, extremely long equations, if not applied at every step.)

Note: phi is the empty set

epsilon is the zero length string

0, 1, a, b, c, are symbols in sigma

x is a variable or regular expression

( ... )( ... ) is concatenation

( ... ) + ( ... ) is union

( ... )* is the Kleene Closure = Kleene Star

(phi)(x) = (x)(phi) = phi

(epsilon)(x) = (x)(epsilon) = x

(phi) + (x) = (x) + (phi) = x

x + x = x

(epsilon)* = (epsilon)(epsilon) = epsilon

(x)* + (epsilon) = (x)* = x*

(x + epsilon)* = x*

x* (a+b) + (a+b) = x* (a+b)

x* y + y = x* y

(x + epsilon)x* = x* (x + epsilon) = x*

(x+epsilon)(x+epsilon)* (x+epsilon) = x*

Now for an example:

Given M=(Q, sigma, delta, q0, F) as

delta | a | b | c Q = { q1, q2}

--------+------+------+----- sigma = { a, b, c }

q1 | {q2} | {q2} | {q1} q0 = q1

--------+------+------+----- F = { q2}

q2 | phi | phi | phi

--------+------+------+-----

Informal method, draw state diagram:

        /--\   a   //--\\  a,b,c
    -->| q1 |---->|| q2 ||-------> phi
        \--/   b   \\--//
        ^  |
        |  | c          guess r = c* (a+b)
        +--+            any number of c followed by a or b
                        phi stops looking at input

Formal method:

Remember r_{11} for k=0 means the regular expression directly from q1 to q1.

           | k=0
    -------+-------------
    r_{11} | c + epsilon    from q1 input c goes to q1 (auto add epsilon when a state goes to itself)
    r_{12} | a + b          from state q1 to q2, either input a or input b
    r_{21} | phi            phi means no transition
    r_{22} | epsilon        (auto add epsilon, a state can stay in that state)

           | k=0         | k=1 (building on k=0, using e for epsilon)
    -------+-------------+-------------------------------------------
    r_{11} | c + epsilon | (c+e)(c+e)* (c+e) + (c+e) = c*
    r_{12} | a + b       | (c+e)(c+e)* (a+b) + (a+b) = c* (a+b)
    r_{21} | phi         | phi (c+e)* (c+e) + phi = phi
    r_{22} | epsilon     | phi (c+e)* (a+b) + e = e

           | k=0         | k=1      | k=2 (building on k=1, using minimized)
    -------+-------------+----------+----------------------------------------
    r_{11} | c + epsilon | c*       |
    r_{12} | a + b       | c* (a+b) | c* (a+b)(e)* (e) + c* (a+b)    only final state
    r_{21} | phi         | phi      |
    r_{22} | epsilon     | e        |

the final regular expression minimizes to r = c* (a+b)
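
The result can be checked mechanically. The following is a minimal Python sketch, not one of the course programs. It simulates the machine M above and compares it with r = c* (a+b), written c*[ab] in Python's re syntax, on every string over {a, b, c} up to length 5. The names delta, accepts and mismatches and the length bound 5 are assumptions made for this illustration.

```python
import itertools
import re

# Transition table of M from the example; a missing entry plays the role of phi.
delta = {
    ("q1", "a"): "q2",
    ("q1", "b"): "q2",
    ("q1", "c"): "q1",
}
start, finals = "q1", {"q2"}

def accepts(s):
    """Simulate M on s; reject as soon as a phi transition is hit."""
    state = start
    for ch in s:
        state = delta.get((state, ch))
        if state is None:
            return False
    return state in finals

pattern = re.compile(r"c*[ab]")   # r = c* (a+b)

# Compare machine and regular expression on all strings of length 0..5.
mismatches = [
    "".join(t)
    for n in range(6)
    for t in itertools.product("abc", repeat=n)
    if accepts("".join(t)) != bool(pattern.fullmatch("".join(t)))
]
print(mismatches)
```

An empty list means the machine and the regular expression agree on every string tested. This is a finite check, not a proof.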

Additional topics include Moore Machines and

Mealy Machines

HW4 is assigned

Review of Basics of Proofs.

The Pumping Lemma is generally used to prove a language is not regular.

If a DFA, NFA or NFA-epsilon machine can be constructed to exactly accept

a language, then the language is a Regular Language.

If a regular expression can be constructed to exactly generate the

strings in a language, then the language is regular.

If a regular grammar can be constructed to exactly generate the

strings in a language, then the language is regular.

To prove a language is not regular requires a specific definition of

the language and the use of the Pumping Lemma for Regular Languages.

A note about proofs using the Pumping Lemma:

Given: Formal statements A and B.

A implies B.

If you can prove B is false, then you have proved A is false.

For the Pumping Lemma, the statement "A" is "L is a Regular Language",

The statement "B" is a statement from the Predicate Calculus.

(This is a plain text file that uses words for the upside down A that

reads 'for all' and the backwards E that reads 'there exists')

Formal statement of the Pumping Lemma:

L is a Regular Language implies

(there exists n)(for all z)[z in L and |z|>=n implies

{(there exists u,v,w)(z = uvw and |uv|<=n and |v|>=1 and

(for all i>=0)(uv^i w is in L) )}]

(uv^i w means u, then i copies of v, then w)

The two commonest ways to use the Pumping Lemma to prove a language

is NOT regular are:

a) show that there is no possible n for the (there exists n),

this is usually accomplished by showing a contradiction such

as (n+1)(n+1) < n*n+n

b) show that for every way to partition z into u, v and w with

|uv|<=n and |v|>=1, some uv^i w is not in L, typically by pumping

a value i=0 or i=2 (i=1 just gives back z). For a given partition,

stop as soon as one uv^i w is not in the language. When every

partition fails this way, you have proved the language is not regular.
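
Method b) can be illustrated for L = { a^n b^n | n>0 } with a minimal Python sketch. For each candidate n it takes z = a^n b^n, tries every partition z = uvw with |uv|<=n and |v|>=1, and pumps i = 0, 1, 2. The function names in_L and some_split_pumps are assumptions made here, and checking only i = 0, 1, 2 for n up to 7 is a sample, not the proof itself.

```python
def in_L(s):
    """Membership test for L = { a^n b^n | n>0 }."""
    half = len(s) // 2
    return len(s) >= 2 and len(s) % 2 == 0 and s == "a" * half + "b" * half

def some_split_pumps(z, n):
    """Return True if some split z = uvw with |uv|<=n and |v|>=1
    keeps u v^i w in L for i = 0, 1, 2 (a small sample of all i>=0)."""
    for uv_len in range(1, n + 1):
        for u_len in range(uv_len):
            u, v, w = z[:u_len], z[u_len:uv_len], z[uv_len:]
            if all(in_L(u + v * i + w) for i in (0, 1, 2)):
                return True
    return False

# For each candidate n, test the string z = a^n b^n.
results = {n: some_split_pumps("a" * n + "b" * n, n) for n in range(1, 8)}
print(results)
```

Every value is False: no partition survives pumping, because |uv|<=n forces v to be all a's, so pumping changes the count of a's but not of b's.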

Note: The pumping lemma only applies to languages (sets of strings)

with infinite cardinality. A DFA can be constructed for any

finite set of strings. Use the regular expression to NFA 'union'

construction.

Notation: the string having n a's followed by n b's is written

on one line as a^n b^n

Languages that are not regular:

L = { a^n b^n n>0 }

L = { a^f1(n) b^f2(n) n<c } for any non degenerate f1, f2.

L = { a^f(n) n>0 } for any function f(n) > k*n+c for all constants k and c

L = { a^(n*n) n>0 } also applies to n a prime(n log n), 2^n, n!

L = { a^n b^k n>0 k>n } can not save count of a's to check b's k>n

L = { a^n b^(k+n) n>0 k>0 } same language as above

Languages that are regular:

L = { a^n n>=0 } this is just r = a*

L = { a^n b^k n>0 k>0 } no relation between n and k, r = a a* b b*

L = { a^(37*n+511) n>0 } 511 states in series, 37 states in loop

You may be proving a lemma, a theorem, a corollary, etc

A proof is based on:

definition(s)

axioms

postulates

rules of inference (typical normal logic and mathematics)

To be accepted as "true" or "valid"

Recognized people in the field need to agree your

definitions are reasonable

axioms, postulates, ... are reasonable

rules of inference are reasonable and correctly applied

"True" and "Valid" are human intuitive judgments but can be

based on solid reasoning as presented in a proof.

Types of proofs include:

Direct proof (typical in Euclidean plane geometry proofs)

Write down line by line provable statements,

(e.g. definition, axiom, statement that follows from applying

the axiom to the definition, statement that follows from

applying a rule of inference from prior lines, etc.)

Proof by contradiction:

Given definitions, axioms, rules of inference

Assume Statement_A

use proof technique to derive a contradiction

(e.g. prove not Statement_A or prove Statement_B = not Statement_B,

like 1 = 2 or n > 2n)

Proof by induction (on Natural numbers)

Given a statement based on, say n, where n ranges over natural numbers

Prove the statement for n=0 or n=1 (the base case), then either

a) Prove the statement for n+1 assuming the statement true for n, or

b) Prove the statement for n+1 assuming the statement true for all k in 1..n

Prove two sets A and B are equal, prove part 1, A is a subset of B

prove part 2, B is a subset of A

Prove two machines M1 and M2 are equal,

prove part 1 that machine M1 can simulate machine M2

prove part 2 that machine M2 can simulate machine M1

Limits on proofs:

Godel incompleteness theorem:

a) Any formal system with enough power to handle arithmetic will

have true theorems that are unprovable in the formal system.

(Godel proved it by encoding formulas and proofs as numbers;

the result can also be derived with Turing machines.)

b) Adding axioms to the system in order to be able to prove all the

"true" (valid) theorems will make the system "inconsistent."

Inconsistent means a theorem can be proved that is not accepted

as "true" (valid).

c) Technically, any formal system with enough power to do arithmetic

is either incomplete or inconsistent.

For reference, read through the automata definitions and

language definitions.

A class of languages is simply a set of languages with some

property that determines if a language is in the class (set).

The class of languages called regular languages is the set of all

languages that are regular. Remember, a language is regular if it is

accepted by some DFA, NFA, NFA-epsilon, regular expression or

regular grammar.

A single language is a set of strings over a finite alphabet and

is therefore countable. A regular language may have an infinite

number of strings. The strings of a regular language can be enumerated,

written down for length 0, length 1, length 2 and so forth.

The class of regular languages is also countable, because every

regular language has a finite description such as a regular expression.

But, the class of all languages over an alphabet is the power set

of sigma star. From mathematics we know the power set of a countably

infinite set is not countable. A set that is not countable, like the

real numbers, can not be enumerated. Thus most languages are not regular.
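
The enumeration by length mentioned above can be sketched in a few lines of Python. Every string over the alphabet gets a finite position in the list, which is what countable means. The generator name enumerate_strings and the alphabet {a, b} are assumptions made for this illustration.

```python
import itertools

def enumerate_strings(alphabet):
    """Yield every string over the alphabet: length 0, then 1, then 2, ..."""
    for length in itertools.count(0):
        for letters in itertools.product(alphabet, repeat=length):
            yield "".join(letters)

# Take the first seven strings of the (infinite) enumeration.
first = list(itertools.islice(enumerate_strings("ab"), 7))
print(first)
```

To enumerate one particular language, keep only the strings that its machine accepts.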

A class of languages is closed under some operation, op, when for any

two languages in the class, say L1 and L2, L1 op L2 is in the class.

This is the definition of "closed."

Summary. Regular languages are closed under operations: concatenation,

union, intersection, complementation, difference, reversal,

Kleene star, substitution, homomorphism and

any finite combination of these operations.

All of these operations are "effective" because there is an

algorithm to construct the resulting language.

Simple constructions include complementation by interchanging final

states and non final states in a DFA. Concatenation, union and Kleene

star are constructed using the corresponding regular expression to

NFA technique. Intersection is constructed using DeMorgan's theorem

and difference is constructed using L - M = L intersect complement M.

Reversal is constructed from a DFA using final states as starting states

and the starting state as the final state, reversing the direction

of all transition arcs.

We have seen that regular languages are closed under union because

the "+" in regular expressions yields a union operator for regular

languages. Similarly, for DFA machines, L(M1) union L(M2) is

L(M1 union M2) by the construction in lecture 6.

Thus we know the set of regular languages is closed under union.

In symbolic terms, L1 and L2 regular languages and L3 = L1 union L2

implies L3 is a regular language.

The complement of a language is defined in terms of set difference

from sigma star: L1 bar = sigma star - L1. The language L1 bar, written

as L1 with a bar over it, has all strings from sigma star except

the strings in L1. Sigma star is all possible strings over the

alphabet sigma. It turns out a DFA for a language can be made a

DFA for the complement language by changing all final states to

not final states and vice versa. (Warning! This is not true for NFA's)

Thus regular languages are closed under complementation.
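
A minimal Python sketch of the complement construction follows. The four state machine below is a hypothetical DFA written for this note (it is not the n000.dfa file); it accepts the strings over {0, 1} that contain 000. The swap of final states works only when the DFA is complete, meaning every state has a transition on every symbol.

```python
def run(delta, start, finals, s):
    """Run a complete DFA on s and report accept (True) or reject (False)."""
    state = start
    for ch in s:
        state = delta[(state, ch)]
    return state in finals

# Hypothetical complete DFA over {0, 1} accepting strings that contain 000.
states = {"s0", "s1", "s2", "s3"}
delta = {
    ("s0", "0"): "s1", ("s0", "1"): "s0",
    ("s1", "0"): "s2", ("s1", "1"): "s0",
    ("s2", "0"): "s3", ("s2", "1"): "s0",
    ("s3", "0"): "s3", ("s3", "1"): "s3",
}
finals = {"s3"}

# Complement: same states and transitions, final and non final states swapped.
co_finals = states - finals

for s in ["", "0100", "10001", "000"]:
    print(repr(s), run(delta, "s0", finals, s), run(delta, "s0", co_finals, s))
```

On every input exactly one of the two machines accepts, because a DFA is in exactly one state after reading the string.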

Given union and complementation, by DeMorgan's Theorem,

L1 and L2 regular languages and L3 = L1 intersect L2 implies

L3 is a regular language. Writing "bar" for complement,

L3 = (L1 bar union L2 bar) bar.

The construction of a DFA, M3, such that L(M3) = L(M1) intersect L(M2)

is given by:

Let M1 = (Q1, sigma, delta1, q1, F1)

Let M2 = (Q2, sigma, delta2, q2, F2)

Let S1 x S2 mean the cross product of sets S1 and S2, all ordered pairs,

Then

M3 = (Q1 x Q2, sigma, delta3, [q1,q2], F1 x F2)

where [q1,q2] is an ordered pair from Q1 x Q2,

delta3 is constructed from

delta3([x1,x2],a) = [delta1(x1,a), delta2(x2,a)] for all a in sigma

and all [x1,x2] in Q1 x Q2.

We choose to say the same alphabet is used in both machines, but this

works in general by picking the alphabet of M3 to be the intersection

of the alphabets of M1 and M2 and using all 'a' from this set.

Regular set properties:

One way to show that an operation on two regular languages produces a

regular language is to construct a machine that performs the operation.

Remember, the machines have to be DFA, NFA or NFA-epsilon type machines

because these machines have corresponding regular languages.

Consider two machines M1 and M2 for languages L1(M1) and L2(M2).

To show that L1 intersect L2 = L3 and L3 is a regular language we

construct a machine M3 and show by induction that M3 only accepts

strings that are in both L1 and L2.

M1 = (Q1, Sigma, delta1, q1, F1) with the usual definitions

M2 = (Q2, Sigma, delta2, q2, F2) with the usual definitions

Now construct M3 = (Q3, Sigma, delta3, q3, F3) defined as

Q3 = Q1 x Q2 set cross product

q3 = [q1,q2] q3 is an element of Q3, the notation means an ordered pair

Sigma_3 = Sigma_1 = Sigma_2 we choose to use the same alphabet,

else some fix up is required

F3 = F1 x F2 set cross product

delta3 is constructed from

delta3([qi,qj],x) = [delta1(qi,x),delta2(qj,x)]

as you might expect, this is most easily performed on a DFA

The language L3(M3) is shown to be the intersection of L1(M1) and L2(M2)

by induction on the length of the input string.

For example:

M1: Q1 = {q0, q1}, Sigma = {a, b}, delta1, q1=q0, F1 = {q1}

M2: Q2 = {q3, q4, q5}, Sigma = {a, b}, delta2, q2=q3, F2 = {q4,q5}

delta1 | a  | b  |

-------+----+----+

 q0    | q0 | q1 |

-------+----+----+

 q1    | q1 | q1 |

-------+----+----+

delta2 | a  | b  |

-------+----+----+

 q3    | q3 | q4 |

-------+----+----+

 q4    | q5 | q3 |

-------+----+----+

 q5    | q5 | q5 |

-------+----+----+

M3 now is constructed as

Q3 = Q1 x Q2 = {[q0,q3], [q0,q4], [q0,q5], [q1,q3], [q1,q4], [q1,q5]}

F3 = F1 x F2 = {[q1,q4], [q1,q5]}

initial state q3 = [q0,q3]

Sigma = technically Sigma_1 intersect Sigma_2

delta3 is constructed from delta3([qi,qj],x) = [delta1(qi,x),delta2(qj,x)]

delta3 | a | b |

---------+---------+---------+

[q0,q3] | [q0,q3] | [q1,q4] | this is a DFA when both

---------+---------+---------+ M1 and M2 are DFA's

[q0,q4] | [q0,q5] | [q1,q3] |

---------+---------+---------+

[q0,q5] | [q0,q5] | [q1,q5] |

---------+---------+---------+

[q1,q3] | [q1,q3] | [q1,q4] |

---------+---------+---------+

[q1,q4] | [q1,q5] | [q1,q3] |

---------+---------+---------+

[q1,q5] | [q1,q5] | [q1,q5] |

---------+---------+---------+

As we have seen before there may be unreachable states.

In this example [q0,q4] and [q0,q5] are unreachable.

It is possible for the intersection of L1 and L2 to be empty,

thus a final state will never be reachable.
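
The cross product construction of this example can be reproduced with a minimal Python sketch. The tables delta1 and delta2 are copied from the example; the variable names and the stack based depth first search are assumptions made for this illustration, not the course's dfa.cpp.

```python
from itertools import product

delta1 = {("q0", "a"): "q0", ("q0", "b"): "q1",
          ("q1", "a"): "q1", ("q1", "b"): "q1"}
delta2 = {("q3", "a"): "q3", ("q3", "b"): "q4",
          ("q4", "a"): "q5", ("q4", "b"): "q3",
          ("q5", "a"): "q5", ("q5", "b"): "q5"}
Q1, F1, start1 = ["q0", "q1"], {"q1"}, "q0"
Q2, F2, start2 = ["q3", "q4", "q5"], {"q4", "q5"}, "q3"
sigma = "ab"

# delta3([qi,qj],x) = [delta1(qi,x), delta2(qj,x)]
Q3 = list(product(Q1, Q2))
delta3 = {((p, q), x): (delta1[(p, x)], delta2[(q, x)])
          for (p, q) in Q3 for x in sigma}
F3 = set(product(F1, F2))
start3 = (start1, start2)

# Depth first search from the start state to find the reachable states.
reachable, stack = set(), [start3]
while stack:
    state = stack.pop()
    if state not in reachable:
        reachable.add(state)
        stack.extend(delta3[(state, x)] for x in sigma)

unreachable = sorted(set(Q3) - reachable)
print(unreachable)
```

The printed list is the pair of unreachable states [q0,q4] and [q0,q5] named above.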

Coming soon, the Myhill-Nerode theorem and minimization to

eliminate useless states.

Some sample DFA coded and run with dfa.cpp

n000.dfa complement of (0+1)*000(0+1)*

n000_dfa.out no 000 accepted

n2p1.dfa 2n+1 zeros n>0

n000_dfa.out 3, 5, 7 accepted

Decision algorithms for regular sets:

Remember: An algorithm must always terminate to be called an algorithm!

Basically, an algorithm needs to have four properties

1) It must be written as a finite number of unambiguous steps

2) For every possible input, only a finite number of steps

will be performed, and the algorithm will produce a result

3) The same, correct, result will be produced for the same input

4) Each step must have properties 1) 2) and 3)

Remember: A regular language is just a set of strings over a finite alphabet.

Every regular set can be represented by a regular expression

and by a minimized finite automaton, a DFA.

We choose to use DFA's, represented by the usual M=(Q, Sigma, delta, q0, F)

There are countably many DFA's yet every DFA we look at has a finite

description. We write down the set of states Q, the alphabet Sigma,

the transition table delta, the initial state q0 and the final states F.

Thus we can analyze every DFA and even simulate them.

Theorem: The regular set accepted by DFA's with n states:

1) the set is non empty if and only if the DFA accepts at least one string

of length less than n. Just try less than |Sigma|^|Q| strings (finite)

2) the set is infinite if and only if the DFA accepts at least one string

of length k, n <= k < 2n. By pumping lemma, just more to try (finite)

Rather obviously the algorithm proceeds by trying the null string first.

The null string is either accepted or rejected in a finite time.

Then try all strings of length 1, i.e. each character in Sigma.

Then try all strings of length 2, 3, ... , k. Every try results in

an accept or reject in a finite time.

Thus we say there is an algorithm to decide if any regular set

represented by a DFA is a) empty, b) finite and c) infinite.
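
The tests in the Theorem can be written down directly. This is a minimal brute force Python sketch; the function names accepts, accepts_some and classify and the two small example machines are hypothetical, written only to illustrate the string length bounds n and 2n.

```python
from itertools import product

def accepts(dfa, s):
    """Run the DFA (delta, start, finals) on the string s."""
    delta, start, finals = dfa
    state = start
    for ch in s:
        state = delta[(state, ch)]
    return state in finals

def accepts_some(dfa, sigma, lengths):
    """True if the DFA accepts at least one string whose length is in lengths."""
    return any(accepts(dfa, "".join(t))
               for k in lengths
               for t in product(sigma, repeat=k))

def classify(dfa, sigma, n):
    """Apply the theorem to a DFA with n states."""
    if not accepts_some(dfa, sigma, range(0, n)):
        return "empty"
    if accepts_some(dfa, sigma, range(n, 2 * n)):
        return "infinite"
    return "finite"

# Hypothetical two state DFA over {a, b}: accepts strings ending in b.
ends_in_b = ({("p", "a"): "p", ("p", "b"): "f",
              ("f", "a"): "p", ("f", "b"): "f"}, "p", {"f"})
# Hypothetical three state DFA: accepts only the string "a" (state d is a trap).
only_a = ({("s", "a"): "f", ("s", "b"): "d",
           ("f", "a"): "d", ("f", "b"): "d",
           ("d", "a"): "d", ("d", "b"): "d"}, "s", {"f"})

print(classify(ends_in_b, "ab", 2), classify(only_a, "ab", 3))
```

The number of strings tried grows like |Sigma|^(2n), which is why the practical application is painful.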

The practical application is painful! e.g. Given a regular expression,

convert it to a NFA, convert NFA to DFA, use Myhill-Nerode to get

a minimized DFA. Now we know the number of states, n, and the alphabet

Sigma. Now run the tests given in the Theorem above.

An example of a program that has not been proven to terminate is

terminates.c with output terminates.out

We will cover a problem, called the halting problem: no Turing

machine can determine, for every program and input, if the program will terminate.

Review for the quiz. (See homework WEB page.)

Open book. Open note, multiple choice in cs451q1.doc

Covers lectures and homework.

cp /afs/umbc.edu/users/s/q/squire/pub/download/cs451q1.doc . # your directory

libreoffice cs451q1.doc

or scp, winscp, to windows and use Microsoft word, winscp back to GL

save

exit

submit cs451 q1 cs451q1.doc

just view cs451q1.doc

See details on lectures, homework, automata and

formal languages using links below.

Open book so be sure you check on definitions and converting

from one definition (or abbreviation) to another.

Regular machine may be a DFA, NFA, NFA-epsilon

DFA deterministic finite automata

processes an input string (tape) and accepts or rejects

M = {Q, sigma, delta, q1, F}

Q set of states

sigma alphabet, list symbols 0,1 a,b,c etc

delta transition table

| 0 | 1 | etc

+----+----+

q1 | q2 | q3 | etc

+----+----+

q1 name of starting state

F list of names of final states

NFA nondeterministic finite automata

M = {Q, sigma, delta, q1, F}

delta transition table

| 0 | 1 | etc

+----+---------+

{q1,q3} | q2 | {q4,q5} | etc

+----+---------+

NFA-epsilon nondeterministic finite automata with epsilon transitions

M = {Q, sigma, delta, q1, F}

delta transition table

| 0 | 1 |epsilon| etc

+----+---------+-------+

{q1,q3} | q2 | {q4,q5} | q3 | etc

+----+---------+-------+

regular expression (defines strings accepted by DFA,NFA,NFA epsilon)

r1 = epsilon (computer input #e)

r2 = 0

r3 = ab

r4 = (a+b)c(a+b)

r5 = 1(0+1)*0(1*)0

regular language, a set of strings defined by a regular expression

from r1 {epsilon}

from r2 {0}

from r3 {ab}

from r4 {aca, acb, bca, bcb} finite

from r5 {100, 110110, ... } infinite
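
For checking a regular expression against its language during review, a minimal Python sketch using the standard re module is given here. The "+" of these notes is written "|" in re syntax; the variable names r4, r5, language and matched are assumptions made for this illustration.

```python
import itertools
import re

# r4 = (a+b)c(a+b); the "+" of these notes is written "|" in re syntax.
r4 = re.compile(r"(a|b)c(a|b)")

# Enumerate the language of r4 by testing every string of length 3 over {a,b,c}.
language = sorted("".join(t) for t in itertools.product("abc", repeat=3)
                  if r4.fullmatch("".join(t)))
print(language)

# r5 = 1(0+1)*0(1*)0 defines an infinite language; test a few strings.
r5 = re.compile(r"1(0|1)*0(1*)0")
matched = [s for s in ["100", "110110", "101"] if r5.fullmatch(s)]
print(matched)
```

Note that fullmatch is used so the whole string must match, as it must for a machine to accept.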

Myhill-Nerode theorem and minimization to eliminate useless states.

The Myhill-Nerode Theorem says the following three statements are equivalent:

1) The set L, a subset of Sigma star, is accepted by a DFA.

(We know this means L is a regular language.)

2) L is the union of some of the equivalence classes of a right

invariant(with respect to concatenation) equivalence relation

of finite index.

3) Let equivalence relation RL be defined by: xRLy if and only if

for all z in Sigma star, xz is in L exactly when yz is in L.

Then RL is of finite index.

The notation RL means an equivalence relation R over the language L.

The notation RM means an equivalence relation R over a machine M.

We know for every regular language L there is a machine M that

exactly accepts the strings in L.

Think of an equivalence relation as being true or false for a

specific pair of strings x and y. Thus xRy is true for some

set of pairs x and y. We will use a relation R such that

xRy <=> yRx x has a relation to y if and only if y has

the same relation to x. This is known as symmetric.

xRy and yRz implies xRz. This is known as transitive.

xRx is true. This is known as reflexive.

Our RL is defined xRLy <=> for all z in Sigma star (xz in L <=> yz in L)

Our RM is defined xRMy <=> delta(q0,x) = delta(q0,y), and RM is right

invariant: xRMy implies xzRMyz for all z in Sigma star.

In other words delta(q0,xz) = delta(delta(q0,x),z) =

delta(delta(q0,y),z) = delta(q0,yz)

for x, y and z strings in Sigma star.

RM divides the set Sigma star into equivalence classes, one class for

each state reachable in M from the starting state q0. To get RL from

this we have to consider only the Final reachable states of M.

From this theorem comes the provable statement that there is a

smallest, fewest number of states, DFA for every regular language.

The labeling of the states is not important, thus the machines

are the same within an isomorphism. (iso=same, morph=form)

Now for the algorithm that takes a DFA, we know how to reduce a NFA

or NFA-epsilon to a DFA, and produces a minimum state DFA.

-3) Start with a machine M = (Q, Sigma, delta, q0, F) as usual

-2) Remove from Q, F and delta all states that can not be reached from q0.

Remember a DFA is a directed graph with states as nodes. Thus use

a depth first search to mark all the reachable states. The unreachable

states, if any, are then eliminated and the algorithm proceeds.

-1) Build a two dimensional matrix labeling the left side q0, q1, ...

running down and denote this as the "p" first subscript.

Label the top as q0, q1, ... and denote this as the "q" second subscript

0) Put dashes in the major diagonal and the lower triangular part

of the matrix (everything below the diagonal). We will always use the

upper triangular part because xRMy = yRMx is symmetric. We will also

use (p,q) to index into the matrix with the subscript of the state

called "p" always less than the subscript of the state called "q".

We can have one of three things in a matrix location where there is

no dash. An X indicates a distinct state from our initialization

in step 1). A link indicates a list of matrix locations

(pi,qj), (pk,ql), ... that will get an x if this matrix location

ever gets an x. At the end, we will label all empty matrix locations

with a O. (Like tic-tac-toe) The "O" locations mean the p and q are

equivalent and will be the same state in the minimum machine.

(This is like {p,q} when we converted a NFA to a DFA. and is

the transitive closure just like in NFA to DFA.)

NOW FINALLY WE ARE READY for 1st Ed. Page 70, Figure 3.8 or

2nd Ed. Page 159.

1) For p in F and q in Q-F put an "X" in the matrix at (p,q)

This is the initialization step. Do not write over dashes.

These matrix locations will never change. An X or x at (p,q)

in the matrix means states p and q are distinct in the minimum

machine. If (p,q) has a dash, put the X in (q,p)

2) BIG LOOP TO END

For every pair of distinct states (p,q) in F X F do 3) through 7)

and for every pair of distinct states (p,q)

in (Q-F) x (Q-F) do 3) through 7)

(Actually we will always have the index of p < index of q and

p never equals q so we have fewer checks to make.)

3) 4) 5)

If for any input symbol 'a', (r,s) has an X or x then put an x at (p,q)

Check (s,r) if (r,s) has a dash. r=delta(p,a) and s=delta(q,a)

Also, if a list exists for (p,q) then mark all (pi,qj) in the list

with an x. Do it for (qj,pi) if (pi,qj) has a dash. You do not have

to write another x if one is there already.

6) 7)

If the (r,s) matrix location does not have an X or x, start a list

at (r,s) or add to the list at (r,s) the pair (p,q). Of course, do not

do this if r = s, or if (p,q) is already on the list. Change (r,s) to

(s,r) if the subscript of the state r is larger than the subscript of

the state s

END BIG LOOP

Now for an example, non trivial, where there is a reduction.

M = (Q, Sigma, delta, q0, F) and we have run a depth first search to

eliminate states from Q, F and delta that can not be reached from q0.

Q = {q0, q1, q2, q3, q4, q5, q6, q7, q8}

Sigma = {a, b}

q0 = q0

F = {q2, q3, q5, q6}

delta | a  | b  |

------+----+----+

 q0   | q1 | q4 |

------+----+----+

 q1   | q2 | q3 |

------+----+----+

 q2   | q7 | q8 |

------+----+----+

 q3   | q8 | q7 |

------+----+----+

 q4   | q5 | q6 |

------+----+----+

 q5   | q7 | q8 |

------+----+----+

 q6   | q7 | q8 |

------+----+----+

 q7   | q7 | q7 |

------+----+----+

 q8   | q8 | q8 |

------+----+----+

note Q-F = {q0, q1, q4, q7, q8}

We use an ordered F x F = {(q2, q3), (q2, q5), (q2, q6),

(q3, q5), (q3, q6), (q5, q6)}

We use an ordered (Q-F) x (Q-F) = {(q0, q1), (q0, q4), (q0, q7), (q0, q8),

(q1, q4), (q1, q7), (q1, q8),

(q4, q7), (q4, q8), (q7, q8)}

Now, build the matrix labeling the "p" rows q0, q1, ...

and labeling the "q" columns q0, q1, ...

and put in dashes on the diagonal and below the diagonal

q0 q1 q2 q3 q4 q5 q6 q7 q8

+---+---+---+---+---+---+---+---+---+

q0 | - | | | | | | | | |

+---+---+---+---+---+---+---+---+---+

q1 | - | - | | | | | | | |

+---+---+---+---+---+---+---+---+---+

q2 | - | - | - | | | | | | |

+---+---+---+---+---+---+---+---+---+

q3 | - | - | - | - | | | | | |

+---+---+---+---+---+---+---+---+---+

q4 | - | - | - | - | - | | | | |

+---+---+---+---+---+---+---+---+---+

q5 | - | - | - | - | - | - | | | |

+---+---+---+---+---+---+---+---+---+

q6 | - | - | - | - | - | - | - | | |

+---+---+---+---+---+---+---+---+---+

q7 | - | - | - | - | - | - | - | - | |

+---+---+---+---+---+---+---+---+---+

q8 | - | - | - | - | - | - | - | - | - |

+---+---+---+---+---+---+---+---+---+

Now fill in for step 1) (p,q) such that p in F and q in (Q-F)

{ (q2, q0), (q2, q1), (q2, q4), (q2, q7), (q2, q8),

(q3, q0), (q3, q1), (q3, q4), (q3, q7), (q3, q8),

(q5, q0), (q5, q1), (q5, q4), (q5, q7), (q5, q8),

(q6, q0), (q6, q1), (q6, q4), (q6, q7), (q6, q8)}

q0 q1 q2 q3 q4 q5 q6 q7 q8

+---+---+---+---+---+---+---+---+---+

q0 | - | | X | X | | X | X | | |

+---+---+---+---+---+---+---+---+---+

q1 | - | - | X | X | | X | X | | |

+---+---+---+---+---+---+---+---+---+

q2 | - | - | - | | X | | | X | X |

+---+---+---+---+---+---+---+---+---+

q3 | - | - | - | - | X | | | X | X |

+---+---+---+---+---+---+---+---+---+

q4 | - | - | - | - | - | X | X | | |

+---+---+---+---+---+---+---+---+---+

q5 | - | - | - | - | - | - | | X | X |

+---+---+---+---+---+---+---+---+---+

q6 | - | - | - | - | - | - | - | X | X |

+---+---+---+---+---+---+---+---+---+

q7 | - | - | - | - | - | - | - | - | |

+---+---+---+---+---+---+---+---+---+

q8 | - | - | - | - | - | - | - | - | - |

+---+---+---+---+---+---+---+---+---+

Now fill in more x's by checking all the cases in step 2)

and apply steps 3) 4) 5) 6) and 7). Finish by filling in blank

matrix locations with "O".

For example (r,s) = (delta(p=q0,a), delta(q=q1,a)) so r=q1 and s= q2

Note that (q1,q2) has an X, thus (q0, q1) gets an "x"

Another from (Q-F) x (Q-F): (r,s) = (delta(p=q4,b), delta(q=q7,b)) so r=q6 and s=q7

thus since (q6, q7) has an X then (p,q) = (q4,q7) gets an "x"

It depends on the order of the choice of (p, q) in step 2) whether

a (p, q) gets added to a list in a cell or gets an "x".

Another (r,s) = (delta(p,a), delta(q,a)) where p=q0 and q=q8 then

r = delta(q0,a) = q1 and s = delta(q8,a) = q8 but (q1, q8) is blank.

Thus start a list in (q1, q8) and put (q0, q8) in this list.

[ This is what 7) says: put (p, q) on the list for (delta(p,a), delta(q,a))

and for our case the variable "a" happens to be the symbol "a".]

Eventually (q1, q8) will get an "x" and the list, including (q0, q8)

will get an "x".

Performing the tedious task results in the matrix:

q0 q1 q2 q3 q4 q5 q6 q7 q8

+---+---+---+---+---+---+---+---+---+

q0 | - | x | X | X | x | X | X | x | x |

+---+---+---+---+---+---+---+---+---+

q1 | - | - | X | X | O | X | X | x | x | The "O" at (q1, q4) means {q1, q4}

+---+---+---+---+---+---+---+---+---+ is a state in the minimum machine

q2 | - | - | - | O | X | O | O | X | X |

+---+---+---+---+---+---+---+---+---+

q3 | - | - | - | - | X | O | O | X | X | The "O" for (q2, q3), (q2, q5) and

+---+---+---+---+---+---+---+---+---+ (q2, q6) means they are one state

q4 | - | - | - | - | - | X | X | x | x | {q2, q3, q5, q6} in the minimum

+---+---+---+---+---+---+---+---+---+ machine. many other "O" just

q5 | - | - | - | - | - | - | O | X | X | confirm this.

+---+---+---+---+---+---+---+---+---+

q6 | - | - | - | - | - | - | - | X | X |

+---+---+---+---+---+---+---+---+---+

q7 | - | - | - | - | - | - | - | - | O | The "O" in (q7, q8) means {q7, q8}

+---+---+---+---+---+---+---+---+---+ is one state in the minimum machine

q8 | - | - | - | - | - | - | - | - | - |

+---+---+---+---+---+---+---+---+---+

The resulting minimum machine is M' = (Q', Sigma, delta', q0', F')

with Q' = { {q0}, {q1,q4}, {q2,q3,q5,q6}, {q7,q8} } four states

F' = { {q2,q3,q5,q6} } only one final state

q0' = {q0}

delta' | a | b |

----------+----------------+------------------+

{q0} | {q1,q4} | {q1,q4} |

----------+----------------+------------------+

{q1,q4} | {q2,q3,q5,q6} | {q2,q3,q5,q6} |

----------+----------------+------------------+

{q2,q3,q5,q6} | {q7,q8} | {q7,q8} |

----------+----------------+------------------+

{q7,q8} | {q7,q8} | {q7,q8} |

----------+----------------+------------------+

Note: Fill in the first column of states first. Check that every

state occurs in some set and in only one set. Since this is a DFA

the next columns must use exactly the state names found in the

first column. e.g. q0 with input "a" goes to q1, but q1 is now {q1,q4}

Use the same technique as was used to convert a NFA to a DFA, but

in this case the result is always a DFA even though the states have

the strange looking names that appear to be sets, but are just

names of the states in the DFA.

It is possible for the entire matrix to be "X" or "x" at the end.

In this case the DFA started with the minimum number of states.

At the heart of the algorithm is the following:

The sets Q-F and F are disjoint, thus the pairs of states (Q-F) X (F)

are distinguishable, marked X. For the pairs of states (p,q) and (r,s)

where r=delta(p,a) and s=delta(q,a) if r is distinguishable from s,

then p is distinguishable from q, thus mark (p,q) with an x.
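
The marking idea can be sketched in Python on the nine state example above. This is a minimal sketch, not the myhill.cpp program: it replaces the lists of steps 6) and 7) with repeated passes over all pairs until no new mark is added, which reaches the same set of marks. The names table, marked and classes are assumptions made for this illustration.

```python
from itertools import combinations

states = ["q0", "q1", "q2", "q3", "q4", "q5", "q6", "q7", "q8"]
finals = {"q2", "q3", "q5", "q6"}
sigma = "ab"
# delta from the example: state -> (next state on a, next state on b)
table = {"q0": ("q1", "q4"), "q1": ("q2", "q3"), "q2": ("q7", "q8"),
         "q3": ("q8", "q7"), "q4": ("q5", "q6"), "q5": ("q7", "q8"),
         "q6": ("q7", "q8"), "q7": ("q7", "q7"), "q8": ("q8", "q8")}

def delta(state, symbol):
    return table[state][sigma.index(symbol)]

# Step 1: mark every pair with exactly one final state (the "X" entries).
marked = {frozenset(pq) for pq in combinations(states, 2)
          if (pq[0] in finals) != (pq[1] in finals)}

# Repeat: mark (p,q) when some symbol leads to an already marked (r,s).
changed = True
while changed:
    changed = False
    for p, q in combinations(states, 2):
        if frozenset((p, q)) in marked:
            continue
        if any(frozenset((delta(p, x), delta(q, x))) in marked for x in sigma):
            marked.add(frozenset((p, q)))
            changed = True

# Unmarked pairs (the "O" entries) are merged into one state.
classes = []
for s in states:
    for c in classes:
        if frozenset((s, c[0])) not in marked:
            c.append(s)
            break
    else:
        classes.append([s])
print(classes)
```

The four classes printed are the four states of the minimum machine M' found by hand.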

If you do not wish to do minimizations by hand,

on linux.gl.umbc.edu use

ln -s /afs/umbc.edu/users/s/q/squire/pub/myhill myhill

myhill < your.dfa # result is in myhill_temp.dfa

or get the C++ source code from

/afs/umbc.edu/users/s/q/squire/pub/download/myhill.cpp

Instructions to compile are comments in source code

instruction on use are here

Grammars that have the same languages as DFA's

A grammar is defined as G = (V, T, P, S) where

V is a set of variables. We usually use capital letters for variables.

T is a set of terminal symbols. This is the same as Sigma for a machine.

P is a list of productions (rules) of the form:

variable -> concatenation of variables and terminals

S is the starting variable. S is in V.

A string z is accepted by a grammar G if some sequence of rules from P

can be applied to z with a result that is exactly the variable S.

We say that L(G) is the language generated (accepted) by the grammar G.

To start, we restrict the productions P to be of the form

A -> w w is a concatenation of terminal symbols

B -> wC w is a concatenation of terminal symbols

A, B and C are variables in V

and thus get a grammar that generates (accepts) a regular language.

Suppose we are given a machine M = (Q, Sigma, delta, q0, F) with

Q = { S }

Sigma = { 0, 1 }

q0 = S

F = { S }

delta | 0 | 1 |

---+---+---+

S | S | S |

---+---+---+

this looks strange because we would normally use q0 in place of S

The regular expression for M is (0+1)*

We can write the corresponding grammar for this machine as

G = (V, T, P, S) where

V = { S } the set of states in the machine

T = { 0, 1 } same as Sigma for the machine

P =

S -> epsilon | 0S | 1S

S = S the q0 state from the machine

the construction of the rules for P is directly from M's delta

If delta has an entry from state S with input symbol 0 go to state S,

the rule is S -> 0S.

If delta has an entry from state S with input symbol 1 go to state S,

the rule is S -> 1S.

There is a rule generated for every entry in delta.

delta(qi,a) = qj yields a rule qi -> a qj

An additional rule is generated for each final state, i.e. S -> epsilon

(An optional encoding is to generate an extra rule for every transition

to a final state: delta(qi,a) = any final state, qi -> a

with this option, if the start state is a final state, the production

S -> epsilon is still required. )

See g_reg.g file for worked example.

See g_reg.out for simulation check.

The shorthand notation S -> epsilon | 0S | 1S is the same as writing

the three rules. Read "|" as "or".
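
The construction of P from delta is mechanical, as this minimal Python sketch suggests. The function name dfa_to_grammar and the dictionary layout are assumptions made for this illustration; they are not the format of the g_reg.g file.

```python
def dfa_to_grammar(delta, finals):
    """Build productions from delta: delta(qi,a) = qj yields qi -> a qj,
    and each final state qf yields qf -> epsilon."""
    rules = {}
    for (state, symbol), target in sorted(delta.items()):
        rules.setdefault(state, []).append(symbol + target)
    for state in sorted(finals):
        rules.setdefault(state, []).insert(0, "epsilon")
    return rules

# The one state machine from the text.
delta = {("S", "0"): "S", ("S", "1"): "S"}
rules = dfa_to_grammar(delta, {"S"})
for variable, bodies in rules.items():
    print(variable, "->", " | ".join(bodies))
```

For the one state machine this prints the single line S -> epsilon | 0S | 1S.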

Grammars can be more powerful (read accept a larger class of languages)

than finite state machines (DFA's NFA's NFA-epsilon regular expressions).

For example the language L = { 0^i 1^i | i=0, 1, 2, ... } is not a regular

language. Yet, this language has a simple grammar

S -> epsilon | 0S1

Note that this grammar violates the restriction needed to make the grammar's

language a regular language, i.e. rules can only have terminal symbols

and then one variable. This rule has a terminal after the variable.

A grammar for matching parentheses might be

G = (V, T, P, S)

V = { S }

T = { ( , ) }

P = S -> epsilon | (S) | SS

S = S

We can check this by rewriting an input string

( ( ( ) ( ) ( ( ) ) ) )

( ( ( ) ( ) ( S ) ) ) S -> (S) where the inside S is epsilon

( ( ( ) ( ) S ) ) S -> (S)

( ( ( ) S S ) ) S -> (S) where the inside S is epsilon

( ( ( ) S ) ) S -> SS

( ( S S ) ) S -> (S) where the inside S is epsilon

( ( S ) ) S -> SS

( S ) S -> (S)

S S -> (S)

Thus the string ((()()(()))) is accepted by G because the rewriting

produced exactly S, the start variable.
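
The rewriting above can be automated with a minimal Python sketch that applies the productions of G backwards. The function name reduces_to_S is an assumption made for this illustration, and the input is assumed to contain only parentheses and spaces.

```python
def reduces_to_S(text):
    """Apply the productions of G backwards until nothing changes.
    "()" -> S is S -> (S) with the inside S equal to epsilon."""
    s = text.replace(" ", "")
    if set(s) - set("()"):
        return False                     # only parentheses are terminals
    if s == "":
        return True                      # S -> epsilon
    previous = None
    while s != previous:
        previous = s
        s = s.replace("()", "S").replace("(S)", "S").replace("SS", "S")
    return s == "S"

print(reduces_to_S("( ( ( ) ( ) ( ( ) ) ) )"))
print(reduces_to_S("( ( ) ) )"))
```

The first string is accepted and the second, with one extra right parenthesis, is rejected. The order of the rewrites differs from the hand derivation, but each rewrite is still one production of P applied backwards.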

More examples of constructing grammars from language descriptions:

Construct a CFG for non empty Palindromes over T = { 0, 1 }

The strings in this language read the same forward and backward.

G = ( V, T, P, S) T = { 0, 1 }, V = { S }, S = S, P is below:

S -> 0 | 1 | 00 | 11 | 0S0 | 1S1

We started the construction with S -> 0 and S -> 1

the shortest strings in the language.

S -> 0S0 is a palindrome with a zero added to either end

S -> 1S1 is a palindrome with a one added to either end

But, we needed S -> 00 and S -> 11 to get the even length

palindromes started.
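
One way to gain confidence in these rules is to generate strings from the grammar and compare them with the palindromes found by brute force. This is a minimal Python sketch; the function name generate and the length bound 5 are assumptions made for this illustration.

```python
from itertools import product

productions = ["0", "1", "00", "11", "0S0", "1S1"]

def generate(max_len):
    """Derive every terminal string of length <= max_len from S."""
    done, forms = set(), {"S"}
    while forms:
        next_forms = set()
        for form in forms:
            for body in productions:
                derived = form.replace("S", body)
                if len(derived) > max_len:
                    continue      # S never derives epsilon, so forms never shrink
                if "S" in derived:
                    next_forms.add(derived)
                else:
                    done.add(derived)
        forms = next_forms
    return done

generated = generate(5)
# All non empty strings over {0, 1} up to length 5 that read the same both ways.
palindromes = {"".join(t) for n in range(1, 6)
               for t in product("01", repeat=n)
               if t == t[::-1]}
print(generated == palindromes, len(generated))
```

The two sets agree up to length 5, with 20 strings in each.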

"Non empty" means there can be no rule S -> epsilon.

Construct the grammar for the language L = { a^n b^n | n>0 }

G = ( V, T, P, S ) T = { a, b } V = { S } S = S P is:

S -> ab | aSb

Because n>0 there can be no S -> epsilon

The shortest string in the language is ab

a's have to be on the front, b's have to be on the back.

When either an "a" or a "b" is added the other must be added

in order to keep the count the same. Thus S -> aSb.

The toughest decision is when to stop adding rules.

In this case start "generating" strings in the language

S -> ab ab for n=1

S -> aSb aabb for n=2

S -> aaSbb aaabbb for n=3 etc.

Thus, no more rules needed.
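
The derivation of a^n b^n for any n>0 follows one pattern, shown in this minimal Python sketch. The function name derive is an assumption made for this illustration.

```python
def derive(n):
    """Return the sentential forms of the derivation of a^n b^n (n > 0):
    apply S -> aSb n-1 times, then S -> ab once."""
    form, steps = "S", ["S"]
    for _ in range(n - 1):
        form = form.replace("S", "aSb")
        steps.append(form)
    form = form.replace("S", "ab")
    steps.append(form)
    return steps

print(" => ".join(derive(3)))
```

For n=3 this prints S => aSb => aaSbb => aaabbb.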

"Generating" the strings in a language defined by a grammar

is also called "derivation" of the strings in a language.

Homework 6 is assigned

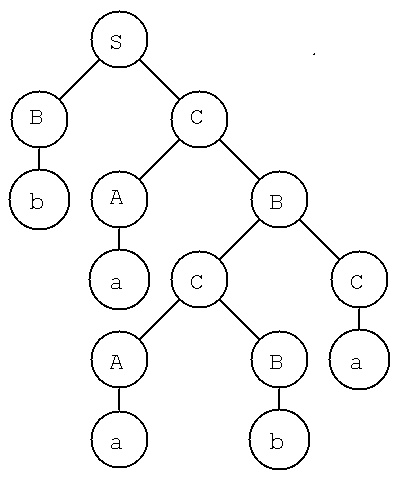

More theory, Context Free Grammars. See below: what was a

state in a DFA is a variable in a grammar; a grammar has no states.

Given a grammar with the usual representation G = (V, T, P, S)

with variables V, terminal symbols T, set of productions P and

the start symbol from V called S.

A production has the form variable -> string of variables and

terminals (possibly epsilon). Often | is used to separate alternative

right sides, rather than writing them on separate lines.

S -> aab

T -> aac | aad | epsilon

A derivation tree is constructed with

1) each tree vertex is a variable or terminal or epsilon

2) the root vertex is S

3) interior vertices are from V, leaf vertices are from T or epsilon

4) an interior vertex A has children, in order, left to right,

X1, X2, ... , Xk when there is a production in P of the

form A -> X1 X2 ... Xk

5) a leaf can be epsilon only when there is a production A -> epsilon

and the leaf's parent can have only this child.

Watch out! A grammar may have an unbounded number of derivation trees.

It just depends on which production is expanded at each vertex.

For any valid derivation tree, reading the leaves from left to right

gives one string in the language defined by the grammar.

There may be many derivation trees for a single string in the language.

If the grammar is a CFG then a leftmost derivation tree exists

for every string in the corresponding CFL. There may be more than one

leftmost derivation tree for some string. See example below and

((()())()) example in previous lecture.

If the grammar is a CFG then a rightmost derivation tree exists

for every string in the corresponding CFL. There may be more than one

rightmost derivation tree for some string.

The grammar is called "ambiguous" if, for some string in the language

defined by the grammar, the leftmost (rightmost) derivation tree is not unique.

The leftmost and rightmost derivations are usually distinct but might

be the same.

Given a grammar and a string in the language represented by the grammar,

a leftmost derivation tree is constructed bottom up by finding a

production in the grammar that has the leftmost character of the string

(possibly more than one may have to be tried) and building the tree

towards the root. Then work on the second character of the string.

After much trial and error, you should get a derivation tree with a root S.

We will get to the CYK algorithm that does the parsing in a few lectures.

Examples: Construct a grammar for L = { x 0^n y 1^n z | n>=0 }

Recognize that 0^n y 1^n is a base language, say B

B -> y | 0B1 (The base y, the recursion 0B1 )

Then, the language is completed S -> xBz

using the prefix, base language and suffix.

(Note that x, y and z could be any strings not involving n)

G = ( V, T, P, S ) where

V = { B, S } T = { x, y, z, 0, 1 } S = S

P = S -> xBz

B -> y | 0B1

Now construct an arbitrary derivation for S =>* x00y11z over G,

written with "*" above the "=>" and "G" below it.

A derivation always starts with the start variable, S.

The "=>", "*" and "G" stand for "derivation", "any number of

steps", and "over the grammar G" respectively.

The intermediate terms, called sentential forms, may contain

variable and terminal symbols.

Any variable, say B, can be replaced by the right side of any

production of the form B -> <right side>

A leftmost derivation always replaces the leftmost variable

in the sentential form. (In general there are many possible

replacements, the process is nondeterministic.)

One possible derivation using the grammar above is

S => xBz => x0B1z => x00B11z => x00y11z

The derivation must obviously stop when the sentential form

has only terminal symbols. (No more substitutions possible.)
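The derivation above can be replayed by a short program. This is a sketch under the assumption that variables are single upper case letters and the grammar is stored as a dictionary P; the function name leftmost_derivation is hypothetical.

```python
P = {"S": ["xBz"], "B": ["y", "0B1"]}     # the grammar above

def leftmost_derivation(start, choices):
    """Apply the chosen right sides, always to the leftmost variable."""
    form, steps = start, [start]
    for rhs in choices:
        # position of the leftmost variable in the sentential form
        i = next(k for k, ch in enumerate(form) if ch in P)
        if rhs not in P[form[i]]:
            raise ValueError("not a production of the grammar")
        form = form[:i] + rhs + form[i + 1:]
        steps.append(form)
    return steps

steps = leftmost_derivation("S", ["xBz", "0B1", "0B1", "y"])
print(" => ".join(steps))
```

The list of choices is where the nondeterminism lives: a different list gives a different string, or an error if a choice is not a production for the leftmost variable.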

The final string is in the language of the grammar. But, this

is a very poor way to generate all strings in the language!

A "derivation tree" sometimes called a "parse tree" uses the

rules above: start with the starting symbol, expand the tree

by creating branches using any right side of a starting

symbol rule, etc.

                S
              / | \
             x  B  z
              / | \
             0  B  1
              / | \
             0  B  1
                |
                y

Derivation ends x 0 0 y 1 1 z with all leaves

terminal symbols, a string in the language generated by the grammar.
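A derivation tree can be stored as nested data and checked against the tree rules. A minimal sketch, assuming an interior vertex is a pair (variable, list of children) and a leaf is a one character string; the names tree, label, leaves and valid are hypothetical.

```python
P = {"S": ["xBz"], "B": ["y", "0B1"]}     # the grammar above

# The tree drawn above: S over x B z, B over 0 B 1 twice, then B over y.
tree = ("S", ["x", ("B", ["0", ("B", ["0", ("B", ["y"]), "1"]), "1"]), "z"])

def label(node):
    """The symbol stored at a vertex."""
    return node if isinstance(node, str) else node[0]

def leaves(node):
    """Read the leaves left to right."""
    if isinstance(node, str):
        return node
    return "".join(leaves(child) for child in node[1])

def valid(node):
    """Every interior vertex and its children must match a production."""
    if isinstance(node, str):
        return node not in P              # a leaf must be a terminal
    var, children = node
    rhs = "".join(label(child) for child in children)
    return rhs in P.get(var, []) and all(valid(child) for child in children)

print(leaves(tree), valid(tree))
```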

More examples of grammars are:

G(L) for L = { x a^n y b^k z | k > n > 0 }

note that there must be more b's than a's thus

B -> aybb | aBb | Bb

G = ( V, T, P, S ) where

V = { B, S } T = { a, b, x, y, z } S = S

P = S -> xBz B -> aybb | aBb | Bb

Incremental changes for "n > k > 0" B -> aayb | aBb | aB

Incremental changes for "n >= k >= 0" B -> y | aBb | aB
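Grammars like these can be spot checked by generating every string up to some length and comparing with the language description. The sketch below checks the k > n > 0 grammar only. It assumes single upper case letters for variables and no epsilon productions, so sentential forms never shrink; the function name language is hypothetical.

```python
def language(P, start, max_len):
    """Terminal strings of length <= max_len derivable from start."""
    done, seen, todo = set(), {start}, [start]
    while todo:
        form = todo.pop()
        # leftmost variable, or None when the form is all terminals
        i = next((k for k, ch in enumerate(form) if ch in P), None)
        if i is None:
            done.add(form)
            continue
        for rhs in P[form[i]]:
            new = form[:i] + rhs + form[i + 1:]
            if len(new) <= max_len and new not in seen:
                seen.add(new)
                todo.append(new)
    return done

P = {"S": ["xBz"], "B": ["aybb", "aBb", "Bb"]}
# x a^n y b^k z with k > n > 0 and total length n + k + 3 <= 12
expected = {"x" + "a" * n + "y" + "b" * k + "z"
            for n in range(1, 12) for k in range(n + 1, 12)
            if n + k + 3 <= 12}
print(language(P, "S", 12) == expected)
```

Agreement up to length 12 is evidence, not a proof, that the grammar matches the description.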

Independent exponents do not cause a problem when nested;

this is equivalent to nesting parentheses.

G(L) for L = { a^i b^j c^j d^i e^k f^k | i>=0, j>=0, k>=0 }

               |   |   |   |   |   |
               |   +---+   |   +---+
               +-----------+

G = ( V, T , P, S )

V = { I, J, K, S } T = { a, b, c, d, e, f } S = S

P = S -> IK

I -> J | aId

J -> epsilon | bJc

K -> epsilon | eKf
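Each variable in this grammar wraps a matched pair of symbols around an inner part, so a recognizer can follow the productions directly. A minimal recursive sketch, one function per variable; the helper names nested, epsilon and accepts are hypothetical.

```python
def nested(s, pos, open_ch, close_ch, inner):
    """Match X -> open X close | inner at pos; return end position or None."""
    if pos < len(s) and s[pos] == open_ch:
        end = nested(s, pos + 1, open_ch, close_ch, inner)
        if end is not None and end < len(s) and s[end] == close_ch:
            return end + 1
        return None
    return inner(s, pos)

def epsilon(s, pos):
    return pos                            # matches the empty string

def J(s, pos):                            # J -> epsilon | bJc
    return nested(s, pos, "b", "c", epsilon)

def I(s, pos):                            # I -> J | aId
    return nested(s, pos, "a", "d", J)

def K(s, pos):                            # K -> epsilon | eKf
    return nested(s, pos, "e", "f", epsilon)

def accepts(s):                           # S -> IK
    mid = I(s, 0)
    return mid is not None and K(s, mid) == len(s)

print(accepts("aabcddeeff"), accepts("abbcd"))
```

This works without backtracking because the next input symbol always tells which production to use.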

G(L) for L = { a^i b^j c^k | any unbounded relation such as i=j=k>0, 0<i<k<j }

For such a language, G(L) cannot be a context free grammar. Try it.

This will be intuitively seen in the push down automata and provable

with the pumping lemma for context free languages.

What is a leftmost derivation tree for some string?

It is a process that looks at the string left to right and

runs the productions backwards.

Here is an example, time starts at top and moves down.

Given G = (V, T, P, S) V={S, E, I} T={a, b, c} S=S P=

I -> a | b | c

E -> I | E+E | E*E

S -> E (a subset of grammar from book)

    Given a string   a + b * c
                     I
                     E
                     S              derived but not used
                         I
                         E
                    [E + E]
                       E
                       S            derived but not used
                             I
                             E
                      [E   * E]
                          E
                          S         done! Have S and no more input.

Left derivation tree: just turn upside down, delete unused.

                  S
                  |
                  E
                / | \
               /  |  \
              E   *   E
            / | \     |
           E  +  E    I
           |     |    |
           I     I    c
           |     |
           a     b

Check: Read leaves left to right, must be initial string, all in T.

Interior nodes must be variables, all in V.

Every vertical connection must be traceable to a production.
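This grammar is ambiguous in the sense defined earlier: a + b * c has more than one derivation tree. A short Python sketch that counts the trees rooted at E (the single step S -> E does not change the count), assuming the input has no spaces. The function name count_trees is hypothetical.

```python
from functools import lru_cache

def count_trees(s):
    """Number of derivation trees for s under E -> I | E+E | E*E, I -> a | b | c."""
    @lru_cache(maxsize=None)
    def count(i, j):                      # trees for s[i:j] rooted at E
        # E -> I -> letter covers exactly one character
        total = 1 if j - i == 1 and s[i] in "abc" else 0
        for k in range(i + 1, j - 1):
            if s[k] in "+*":              # E -> E+E or E -> E*E, split at s[k]
                total += count(i, k) * count(k + 1, j)
        return total
    return count(0, len(s))

print(count_trees("a+b*c"))
```

The count for a+b*c is 2: the tree drawn above with * at the top, and a second tree with + at the top.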

The goal here is to take an arbitrary Context Free Grammar

G = (V, T, P, S) and perform transformations on the grammar that

preserve the language generated by the grammar but reach a

specific format for the productions.

Overview: Step 1a) Eliminate useless variables that can not become terminals

Step 1b) Eliminate useless variables that can not be reached

Step 2) Eliminate epsilon productions

Step 3) Eliminate unit productions

Step 4) Make productions Chomsky Normal Form

Step 5) Make productions Greibach Normal Form

The CYK parsing uses Chomsky Normal Form as input

The CFG to NPDA uses Greibach Normal Form as input

Details: one step at a time

1a) Eliminate useless variables that can not become terminals

See 1st Ed. book p88, Lemma 4.1, figure 4.7

2nd Ed. section 7.1

Basically: Build the set NEWV from productions of the form

V -> w where V is a variable and w is one or more terminals.

Insert V into the set NEWV.

Then iterate over the productions, now accepting any variable

in w as a terminal if it is in NEWV. Thus NEWV is all the

variables that can be reduced to all terminals.

Now, all productions containing a variable not in NEWV

can be thrown away. Thus T is unchanged, S is unchanged,

V=NEWV and P may become the same or smaller.

The new grammar G=(V,T,P,S) represents the same language.
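Step 1a can be sketched in a few lines of Python. The representation is an assumption: V is a set of one character variables and P is a list of (left side, right side string) pairs. The function name remove_nongenerating and the sample grammar are hypothetical. A production with an empty right side (epsilon) is treated here as reducing to terminals.

```python
def remove_nongenerating(V, P):
    """Return (NEWV, new P) keeping only variables that can become terminals."""
    newv = set()

    def ok(rhs):
        # every symbol is a terminal or a variable already in NEWV
        return all(ch not in V or ch in newv for ch in rhs)

    changed = True
    while changed:                        # iterate until NEWV stops growing
        changed = False
        for lhs, rhs in P:
            if lhs not in newv and ok(rhs):
                newv.add(lhs)
                changed = True
    newp = [(lhs, rhs) for lhs, rhs in P if lhs in newv and ok(rhs)]
    return newv, newp

V = {"S", "A", "B", "C"}
P = [("S", "AB"), ("S", "a"), ("A", "a"), ("B", "Bb"), ("C", "c")]
newv, newp = remove_nongenerating(V, P)
print(sorted(newv), newp)
```

In the sample, B can never become all terminals, so B and the production S -> AB are thrown away. C survives this step because it is removed only by step 1b.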

1b) Eliminate useless variables that can not be reached from S

See 1st Ed. book p89, Lemma 4.2, 2nd Ed. book 7.1.

Set V'={S}, T'=phi, mark all productions as unused.

Iterate repeatedly through all productions until no change

in V' or T'. For any production A -> w, with A in V'

insert the terminals from w into the set T' and insert

the variables from w into the set V' and mark the

production as used.

Now, delete all productions from P that are marked unused.

V=V', T=T', S is unchanged.

The new grammar G=(V,T,P,S) represents the same language.
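Step 1b can be sketched the same way. As before, the representation (V a set of one character variables, P a list of pairs) and the names remove_unreachable and the sample grammar are assumptions for illustration.

```python
def remove_unreachable(V, P, S):
    """Return (V', T', new P) keeping only what can be reached from S."""
    reach_v, reach_t = {S}, set()
    used = set()                          # indices of productions marked used
    changed = True
    while changed:                        # repeat until no change in V' or T'
        changed = False
        for n, (lhs, rhs) in enumerate(P):
            if lhs in reach_v and n not in used:
                used.add(n)
                changed = True
                for ch in rhs:
                    if ch in V:
                        reach_v.add(ch)   # variable from w goes into V'
                    else:
                        reach_t.add(ch)   # terminal from w goes into T'
    newp = [p for n, p in enumerate(P) if n in used]
    return reach_v, reach_t, newp

V = {"S", "A", "C"}
P = [("S", "aA"), ("A", "b"), ("C", "c")]
print(remove_unreachable(V, P, "S"))
```

In the sample, C is never reached from S, so C, the terminal c and the production C -> c are all dropped.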

2) Eliminate epsilon productions.

See 1st Ed. book p90, Theorem 4.3, 2nd Ed. book 7.1

This is complex. If the language of the grammar contains