About Me


I am a Computer Science Master's student in the Computer Science & Electrical Engineering department at the University of Maryland, Baltimore County, and a member of the VANGOGH computer graphics research lab. My research interests include most areas of computer graphics, with a focus on graphics for games, real-time rendering, and graphics hardware. My advisor is Dr. Marc Olano.

For the past two years I have had the opportunity to work at Firaxis Games under the direction of Dan Baker. Working at Firaxis has given me an understanding of the unique requirements the game industry places on graphics techniques, and it complements my more theoretical experience at UMBC.

This website shows some of the graphics projects that I have worked on and consider worthy of public exhibition.


A copy of my CV is available here in PDF format.


Research


The following is a list of my current and past research projects.


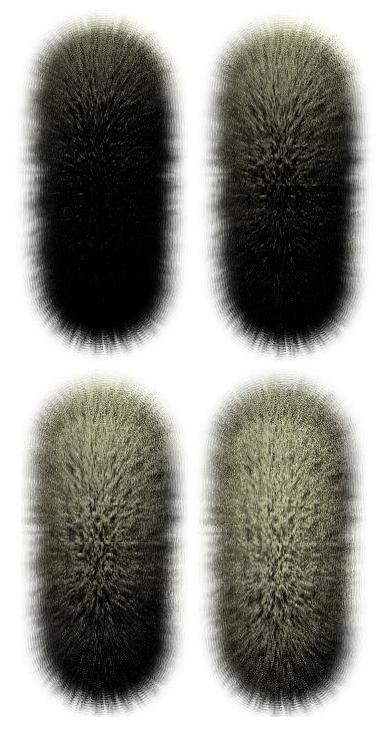

Interactive Volume Isosurface Rendering Using BT Volumes


Description:

This paper presents a volume representation format called BT Volumes, along with a technique to render them interactively and two methods to create useful data in BT Volume format, including high-quality reconstruction filtering. Medical applications rely heavily on isosurface data to visualize anatomy, but current real-time isosurface rendering techniques such as Marching Cubes are limited in flexibility and provide only low-order linear reconstruction filtering. As an alternative to creating triangular geometry to represent the surface, we ray trace an exact isosurface directly inside a pixel shader. We construct a set of Bezier Tetrahedra to approximate any reconstruction filter with arbitrary footprint. We then precompute the volume convolved with this filter as a tetrahedral grid with Bezier weights that can be ray traced in graphics hardware. Our technique is fast, renders any isosurface level without additional work, and performs high-quality reconstruction filtering with arbitrary footprints and reconstruction kernels.
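To make the Bezier Tetrahedra idea more concrete, here is a minimal Python sketch (not the paper's shader code) of two core operations: evaluating a degree-n Bezier tetrahedron from its Bezier weights at barycentric coordinates, and finding where a ray segment through one tetrahedron crosses a chosen isovalue. The function names, the dictionary layout of the weights, and the sign-change-plus-bisection root search are assumptions made for illustration; the paper's pixel-shader root finder may work differently.

```python
from math import factorial


def tet_indices(n):
    """All multi-indices (i, j, k, l) with i + j + k + l == n."""
    return [(i, j, k, n - i - j - k)
            for i in range(n + 1)
            for j in range(n + 1 - i)
            for k in range(n + 1 - i - j)]


def bezier_tet_eval(coeffs, n, bary):
    """Evaluate a degree-n Bezier tetrahedron at barycentric coordinates bary.

    coeffs maps each multi-index (i, j, k, l) to its Bezier weight.
    """
    b0, b1, b2, b3 = bary
    total = 0.0
    for (i, j, k, l), c in coeffs.items():
        # Trivariate Bernstein polynomial: n!/(i!j!k!l!) * b0^i * b1^j * b2^k * b3^l
        multinomial = factorial(n) // (factorial(i) * factorial(j) * factorial(k) * factorial(l))
        total += c * multinomial * b0**i * b1**j * b2**k * b3**l
    return total


def ray_isosurface_hit(coeffs, n, bary_in, bary_out, iso, samples=16, iters=50):
    """Return the first t in [0, 1] where the field crosses iso, or None.

    Barycentric coordinates are affine along a ray, so the field restricted to
    the segment from bary_in (t = 0) to bary_out (t = 1) is a degree-n polynomial in t.
    """
    def f(t):
        bary = tuple((1.0 - t) * a + t * b for a, b in zip(bary_in, bary_out))
        return bezier_tet_eval(coeffs, n, bary) - iso

    t_prev, f_prev = 0.0, f(0.0)
    for s in range(1, samples + 1):
        t_cur = s / samples
        f_cur = f(t_cur)
        if f_prev * f_cur <= 0.0:
            # Sign change in [t_prev, t_cur]: refine the crossing by bisection.
            lo, hi, f_lo = t_prev, t_cur, f_prev
            for _ in range(iters):
                mid = 0.5 * (lo + hi)
                f_mid = f(mid)
                if f_lo * f_mid <= 0.0:
                    hi = mid
                else:
                    lo, f_lo = mid, f_mid
            return 0.5 * (lo + hi)
        t_prev, f_prev = t_cur, f_cur
    return None


# Bezier weights that reproduce the linear field b1 + 2*b2 + 3*b3 at degree 2.
values = (0.0, 1.0, 2.0, 3.0)
n = 2
coeffs = {idx: sum(a * v for a, v in zip(idx, values)) / n for idx in tet_indices(n)}
t_hit = ray_isosurface_hit(coeffs, n, (1.0, 0.0, 0.0, 0.0), (0.0, 0.0, 0.0, 1.0), iso=1.5)
print(t_hit)  # approximately 0.5
```

Because the field along the segment is a polynomial in t, a coarse sign-change scan can miss two crossings that fall inside the same sub-interval; a production ray tracer would need a more robust root isolation step.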

Full paper (PDF)

Video (WMV) of the technique in action.

Published: In Proceedings of the 2008 Symposium on Interactive 3D Graphics and Games (Redwood City, California, February 15-17, 2008). SI3D '08. ACM, New York, NY, pp. 45-52.


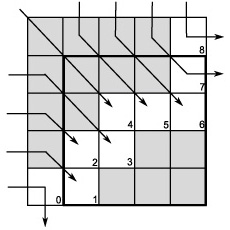

Leveraging Graphics Hardware to Accelerate Dynamic Programming


Description:

We present a new framework for solving dynamic programming problems on widely available parallel graphics processing hardware. Our method performs dynamic programming at three distinct levels of parallelism, each mapped to a specific layer of hardware and optimized for that layer's memory structure. We perform linear-space processing at the highest level of the dynamic programming, a quadratic-space algorithm at the middle layer, and a different linear-space algorithm at the final layer. Running all three on a single CPU and Graphics Processing Unit (GPU) yields a fast, flexible, and scalable solution to dynamic programming problems belonging to the Gaussian Elimination Paradigm. An implementation of our method shows performance gains of up to nine times over the fastest single-processor solution available. We present theoretical and empirical analysis of our method, along with a detailed description of our implementation and the justification for our design.
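For readers unfamiliar with the Gaussian Elimination Paradigm, the sketch below shows the sequential triple loop it is usually stated as, with Floyd-Warshall shortest paths and Gaussian elimination as two instances. This is only the single-processor reference computation; it does not show the paper's three-level CPU/GPU decomposition, and the names gep, update, and in_sigma are hypothetical.

```python
INF = float("inf")


def gep(c, update, in_sigma=lambda i, j, k: True):
    """Sequential GEP loop nest; updates the n x n table c in place.

    For k = 0..n-1 (outermost), then i and j, every (i, j, k) accepted by
    in_sigma performs c[i][j] = update(c[i][j], c[i][k], c[k][j], c[k][k]).
    """
    n = len(c)
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if in_sigma(i, j, k):
                    c[i][j] = update(c[i][j], c[i][k], c[k][j], c[k][k])
    return c


def floyd_warshall(dist):
    """All-pairs shortest paths: relax i -> j through intermediate vertex k."""
    return gep(dist, lambda x, u, v, w: min(x, u + v))


def gaussian_eliminate(a):
    """Forward elimination without pivoting; the upper triangle ends up holding U.

    Entries strictly below the diagonal are left at stale values (multipliers
    are not stored).
    """
    return gep(a, lambda x, u, v, w: x - u * v / w,
               in_sigma=lambda i, j, k: i > k and j > k)


graph = [[0, 4, 1],
         [INF, 0, INF],
         [INF, 2, 0]]
print(floyd_warshall(graph)[0][1])  # 3, via the path 0 -> 2 -> 1
```

Every instance shares the same dependence pattern on row k and column k, which is what makes a single parallel scheme applicable to the whole family.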

Published: In Proceedings of EGPGV 2008: the 8th Eurographics Symposium on Parallel Graphics and Visualization (Crete, Greece, April 14-15, 2008).


Projects


Below are computer graphics projects that I have worked on, mostly for classes.


CMSC691G: Graphics for Games - Final Project


Date: Fall semester, 2007

Project Type: Class Project for Graphics for Games (CMSC691G)

Project Time: Last half of the semester.

Description:

The requirements for this project were to develop an interactive application, preferably a game, that exhibited some technically interesting feature. Beyond these two requirements, we were allowed and encouraged to be creative and choose our own project.

Our group, which consisted of me, Jonathan Decker, and Brian Strege, decided to create a top-down scrolling shooter similar to Raptor or Tyrian. Our game included three innovative features, as the project required: 1) procedural cloud rendering, 2) random fractal terrain, and 3) physics-based gameplay. The gameplay revolves around the player attempting to complete each stage of the game within a fixed time limit. Trying to stop the player are generic "enemy" ships, each equipped with a grappling hook that can latch onto the player and add extra drag to slow the player down.
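The text does not say how the random fractal terrain was generated. One common technique is the diamond-square algorithm, sketched below in Python as an assumption rather than a description of the game's code; the function name and the roughness parameter, which controls how quickly the random displacement shrinks at finer scales, are hypothetical.

```python
import random


def diamond_square(n, roughness=0.5, seed=None):
    """Return a (2**n + 1) x (2**n + 1) fractal heightmap as nested lists."""
    size = 2**n + 1
    rng = random.Random(seed)
    h = [[None] * size for _ in range(size)]
    last = size - 1
    for y, x in ((0, 0), (0, last), (last, 0), (last, last)):
        h[y][x] = rng.uniform(-1.0, 1.0)

    step, scale = last, 1.0
    while step > 1:
        half = step // 2
        # Diamond step: the center of each square is the average of its
        # four corners plus a random displacement.
        for y in range(half, size, step):
            for x in range(half, size, step):
                avg = (h[y - half][x - half] + h[y - half][x + half]
                       + h[y + half][x - half] + h[y + half][x + half]) / 4.0
                h[y][x] = avg + rng.uniform(-scale, scale)
        # Square step: each edge midpoint is the average of its in-bounds
        # neighbors (two corners and up to two centers) plus a displacement.
        for y in range(0, size, half):
            for x in range((y + half) % step, size, step):
                total, count = 0.0, 0
                for dy, dx in ((-half, 0), (half, 0), (0, -half), (0, half)):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < size and 0 <= xx < size:
                        total += h[yy][xx]
                        count += 1
                h[y][x] = total / count + rng.uniform(-scale, scale)
        step = half
        scale *= roughness  # smaller displacements at finer scales
    return h


terrain = diamond_square(5, roughness=0.55, seed=42)  # 33 x 33 heights
```

Lower roughness values give smoother terrain; a fixed seed makes each stage reproducible.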

You can download a Windows executable of our final project here (~2 MB; requires DirectX 9.0). Feel free to e-mail me feedback at jk3@umbc.edu.

Status: Completed


CMSC691G: Graphics for Games - Assignment 2


Date: Fall semester, 2007

Project Type: Class Project for Graphics for Games (CMSC691G)

Project Time: Ten days.

Description:

For this project we were required to write shaders in the language of our choice to re-create a physical object handed out in class. The object I received was a $5 gaming token from the Mirage casino in Las Vegas. Graduate students in the class were also required to add an "otherworldly, pulsing glow" to the object, with a focus on making the glow interesting.

The albedo texture I created in GIMP is responsible for the Mirage logo, the overall color of the chip, and the light-colored center ring. Instead of using texture gutters, I stored a mask in the alpha channel of the texture indicating whether each texel actually lies within the texture boundaries of the model. Then, when I sample the texture in the shader, I divide by the alpha term. This removes the contribution of any texels that should not contribute (this effect can be seen in the screenshots). The stripes were created procedurally in the shader by masking the 3D coordinates with a series of step() calls. The position was perturbed by a 3D Perlin noise texture created at run time to create the uneven walls.
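Below is a minimal Python sketch of two of the shader tricks described above, written on the CPU for clarity. It assumes that texels outside the UV chart store zero color and zero alpha, so dividing the filtered color by the filtered alpha re-normalizes the bilinear weights over the valid texels only. The stripe helper mimics the HLSL step() intrinsic with a hypothetical list of bands along one coordinate. Names and data layouts are illustrative, not the original shader.

```python
import math


def bilinear(tex, u, v):
    """Bilinearly filter an RGBA texture stored as rows of 4-tuples.

    (u, v) are in texel units with integer values at texel centers;
    coordinates are clamped to the texture edge.
    """
    h, w = len(tex), len(tex[0])
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = u - x0, v - y0
    out = []
    for c in range(4):
        top = tex[y0][x0][c] * (1 - fx) + tex[y0][x1][c] * fx
        bottom = tex[y1][x0][c] * (1 - fx) + tex[y1][x1][c] * fx
        out.append(top * (1 - fy) + bottom * fy)
    return tuple(out)


def sample_masked(tex, u, v):
    """Filter, then divide RGB by the filtered mask to cancel out-of-chart texels.

    Assumes out-of-chart texels store (0, 0, 0, 0) and in-chart texels alpha = 1.
    """
    r, g, b, a = bilinear(tex, u, v)
    if a == 0.0:
        return (0.0, 0.0, 0.0)
    return (r / a, g / a, b / a)


def step(edge, x):
    """HLSL-style step(): 1.0 when x >= edge, else 0.0."""
    return 1.0 if x >= edge else 0.0


def stripe_mask(coord, bands):
    """1.0 inside any non-overlapping [lo, hi) band along one coordinate, else 0.0."""
    return sum(step(lo, coord) - step(hi, coord) for lo, hi in bands)


# One valid texel next to one masked-out texel: plain filtering would darken
# the color halfway between them, while the masked sample keeps it intact.
tex = [[(0.5, 0.25, 1.0, 1.0), (0.0, 0.0, 0.0, 0.0)]]
print(bilinear(tex, 0.5, 0.0))       # (0.25, 0.125, 0.5, 0.5)
print(sample_masked(tex, 0.5, 0.0))  # (0.5, 0.25, 1.0)
```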

Diffuse lighting was computed by projecting the cubemap into 3rd-order spherical harmonics (using the DirectX Extensions) and passing the coefficients into the shader. The shader then evaluates the SH function in the direction of the normal and uses the resulting value as the diffuse lighting term. I think it looks pretty good.
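The following Python sketch illustrates the SH diffuse lighting step under stated assumptions: "3rd order" is taken to mean bands 0 to 2 (nine coefficients, which is how D3DX counts SH order), the cubemap is replaced by an arbitrary radiance function sampled on an equal-area grid, and the coefficients are convolved with the clamped-cosine band weights from Ramamoorthi and Hanrahan's irradiance environment maps. The text does not say whether that convolution was applied, so treat it as an illustrative choice; all function names are hypothetical.

```python
import math


def sh9(x, y, z):
    """Real SH basis for bands 0-2 at a unit direction (x, y, z)."""
    return [
        0.282095,                                      # l=0
        0.488603 * y, 0.488603 * z, 0.488603 * x,      # l=1, m=-1,0,1
        1.092548 * x * y, 1.092548 * y * z,            # l=2, m=-2,-1
        0.315392 * (3.0 * z * z - 1.0),                # l=2, m=0
        1.092548 * x * z, 0.546274 * (x * x - y * y),  # l=2, m=1,2
    ]


BAND_OF = [0, 1, 1, 1, 2, 2, 2, 2, 2]
# Clamped-cosine convolution weight for each band (Ramamoorthi and Hanrahan).
COSINE_BAND = [math.pi, 2.0 * math.pi / 3.0, math.pi / 4.0]


def sphere_grid(n_z=64, n_phi=128):
    """Yield (direction, solid_angle) at cell centers of an equal-area grid."""
    d_omega = 4.0 * math.pi / (n_z * n_phi)
    for i in range(n_z):
        z = -1.0 + (i + 0.5) * 2.0 / n_z
        r = math.sqrt(max(0.0, 1.0 - z * z))
        for j in range(n_phi):
            phi = (j + 0.5) * 2.0 * math.pi / n_phi
            yield (r * math.cos(phi), r * math.sin(phi), z), d_omega


def project_sh9(radiance):
    """Project radiance(x, y, z) onto the nine SH coefficients."""
    coeffs = [0.0] * 9
    for (x, y, z), d_omega in sphere_grid():
        value = radiance(x, y, z)
        for i, basis in enumerate(sh9(x, y, z)):
            coeffs[i] += value * basis * d_omega
    return coeffs


def irradiance(coeffs, normal):
    """Cosine-convolve the SH lighting and evaluate it in the normal's direction."""
    basis = sh9(*normal)
    return sum(COSINE_BAND[BAND_OF[i]] * coeffs[i] * basis[i] for i in range(9))


sky = project_sh9(lambda x, y, z: 1.0 if z > 0.0 else 0.0)  # light from above only
print(irradiance(sky, (0.0, 0.0, 1.0)))   # approximately pi
print(irradiance(sky, (0.0, 0.0, -1.0)))  # approximately 0
```

Multiplying the irradiance by albedo / pi gives the Lambertian outgoing radiance; the nine-term representation is cheap enough to evaluate per pixel.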

I created a normal map for the "MIRAGE" lettering on the sides using a normal map plugin for GIMP. For this to work properly, you need a tangent frame aligned to the texture coordinates, which (fortunately) is exactly the frame the DirectX Extensions generate, based on the normals exported from Blender. Since I render the screen-unwrapped texture coordinates into a texture to use as a template, all the math works out. To enhance the bump effect, I added a component to the albedo texture that outlines each bump area. It works pretty well.
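A common way to obtain a tangent frame aligned with the texture coordinates is to solve, per triangle, for the surface directions in which u and v increase. The Python sketch below does this and then applies a decoded normal-map texel; it is a generic illustration with hypothetical function names, not the D3DX routine the text refers to, and it ignores per-vertex averaging, orthogonalization, and the handedness conventions that differ between APIs.

```python
import math


def sub(a, b):
    return tuple(p - q for p, q in zip(a, b))


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def triangle_tangent_frame(p0, p1, p2, uv0, uv1, uv2):
    """Tangent T (direction of +u) and bitangent B (direction of +v) for one triangle.

    Solves e1 = du1*T + dv1*B and e2 = du2*T + dv2*B for T and B.
    """
    e1, e2 = sub(p1, p0), sub(p2, p0)
    du1, dv1 = uv1[0] - uv0[0], uv1[1] - uv0[1]
    du2, dv2 = uv2[0] - uv0[0], uv2[1] - uv0[1]
    r = 1.0 / (du1 * dv2 - du2 * dv1)  # assumes non-degenerate UVs
    t = tuple((a * dv2 - c * dv1) * r for a, c in zip(e1, e2))
    b = tuple((c * du1 - a * du2) * r for a, c in zip(e1, e2))
    return t, b


def perturb_normal(rgb, t, b, n):
    """Decode a [0, 1] normal-map texel and move it from tangent space to model space."""
    nx, ny, nz = (2.0 * c - 1.0 for c in rgb)
    return normalize(tuple(nx * ti + ny * bi + nz * ni for ti, bi, ni in zip(t, b, n)))


t, b = triangle_tangent_frame((0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0), (1, 0), (0, 1))
print(perturb_normal((0.5, 0.5, 1.0), t, b, (0.0, 0.0, 1.0)))  # (0.0, 0.0, 1.0)
```

A flat texel (0.5, 0.5, 1.0) leaves the geometric normal unchanged, which is a quick sanity check for the frame.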

I decided to add glowing Mordor writing on the outside of the token to make it the "one token of power." The glow is based on the sine of the application time, and the mask is defined by a texture adapted from Wikipedia. One trick that made this much easier was to unwrap the object into screen space and save the frame buffer to a file. Saving the frame buffer takes literally two function calls in D3D, so this was trivial to implement, but it allowed me to paint on the output texture knowing where on the model each stroke would end up.
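The screen-space unwrap amounts to drawing each vertex at its texture coordinate instead of its projected position, and the glow is a mask scaled by a sine of time. The Python sketch below shows both mappings; the function names and the parameters base, amplitude, and frequency_hz are hypothetical, and the y flip assumes Direct3D's convention of v increasing downward. Direct3D 9 also needs a half-pixel offset for exact texel-to-pixel alignment, which this sketch leaves out.

```python
import math


def uv_to_clip(u, v):
    """Map a texture coordinate in [0, 1]^2 to clip-space x, y in [-1, 1].

    Drawing each vertex here instead of at its projected position "unwraps"
    the mesh, so each pixel of the render lands on the texel it will sample.
    v grows downward in texture space while clip-space y grows upward.
    """
    return (2.0 * u - 1.0, 1.0 - 2.0 * v)


def pulsing_glow(mask, time_s, base=0.5, amplitude=0.5, frequency_hz=1.0):
    """Glow intensity: a texture mask value modulated by a sine of application time."""
    return mask * (base + amplitude * math.sin(2.0 * math.pi * frequency_hz * time_s))


print(uv_to_clip(0.0, 0.0))      # (-1.0, 1.0), the top-left corner
print(pulsing_glow(1.0, 0.25))   # peak of the pulse at a quarter period
```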

Video (~4MB) of the final project.

Status: Completed


Precomputed Radiance Transfer Fur over Arbitrary Surfaces


Project Type: Undergraduate Thesis

Description:

For my undergraduate thesis I developed a technique for rendering realistic "global" lighting effects for fur in real time. "Global" in this context refers to light interactions between hairs within a patch of fur, not light interactions between separate parts of a furry model, such as a foot and the head. This project extended Real-Time Fur with Precomputed Radiance Transfer (below) to allow the patch of fur to be pasted over a 3D model.

One of the disadvantages of Precomputed Radiance Transfer techniques based on spherical harmonics is the complexity of the rotation matrices for the spherical harmonic basis functions. In general, rotating a spherical function in the spherical harmonic basis is too computationally expensive to perform in a vertex or pixel shader on graphics hardware. Because Real-Time Fur with Precomputed Radiance Transfer stores the lighting effects of fur in spherical harmonics, the lit patch of fur cannot be rotated into the tangent space of a model and, consequently, cannot be rendered over an arbitrary model. This project fixes this by using only a subset of the spherical harmonics, known as the zonal harmonics, to encode the light transfer. Zonal harmonic functions can be rotated easily, and a set of zonal harmonic functions rotated to different directions can approximate an arbitrary spherical harmonic function very closely.
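The reason zonal harmonics rotate cheaply is that a function symmetric about the z axis, with one coefficient z_l per band, rotates to a unit direction d with coefficients f_lm = sqrt(4*pi / (2l + 1)) * z_l * Y_lm(d), so no rotation matrix is needed. The Python sketch below applies this formula for bands 0 to 2 and sums several rotated lobes; the band count, coefficient ordering, and function names are assumptions for illustration, and fitting the lobe directions and coefficients (the hard part of the thesis) is not shown.

```python
import math


def sh9(x, y, z):
    """Real SH basis for bands 0-2 at a unit direction (x, y, z)."""
    return [
        0.282095,
        0.488603 * y, 0.488603 * z, 0.488603 * x,
        1.092548 * x * y, 1.092548 * y * z,
        0.315392 * (3.0 * z * z - 1.0),
        1.092548 * x * z, 0.546274 * (x * x - y * y),
    ]


BAND_OF = [0, 1, 1, 1, 2, 2, 2, 2, 2]


def rotate_zh(zonal, direction):
    """Rotate a zonal function (z_0, z_1, z_2 about +z) so its axis is `direction`.

    f_lm = sqrt(4*pi / (2l + 1)) * z_l * Y_lm(direction); direction must be unit length.
    """
    basis = sh9(*direction)
    return [math.sqrt(4.0 * math.pi / (2 * BAND_OF[i] + 1)) * zonal[BAND_OF[i]] * basis[i]
            for i in range(9)]


def sum_of_lobes(lobes):
    """Approximate an SH function as a sum of rotated ZH lobes [(zonal, direction), ...]."""
    total = [0.0] * 9
    for zonal, direction in lobes:
        for i, c in enumerate(rotate_zh(zonal, direction)):
            total[i] += c
    return total


s = 1.0 / math.sqrt(3.0)
f = rotate_zh([1.0, 2.0, 3.0], (s, s, s))
# Rotation preserves each band's energy: the sum of f_lm**2 over m equals z_l**2.
print(sum(c * c for c in f[4:9]))  # approximately 9
```

The per-lobe cost is one SH basis evaluation and a few multiplies, which is why this fits in a shader where a full SH rotation does not.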

Project proposal (PDF)

Final paper for the project (PDF).

Status: Completed


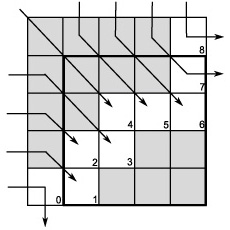

Real-Time Fur with Precomputed Radiance Transfer


Project Type: Class Project for Advanced Computer Graphics (CMSC635)

Date: Fall semester, 2005

Description:

This was a project for Advanced Computer Graphics (CMSC635) and involved creating and lighting a small patch of fur.

The fur model was a set of toroidally tiling semi-transparent shells, where the opacity was set by the density of the fur at each point. I wrote a particle system to trace the path of each hair through the volume, where each particle left an anti-aliased "density trace" wherever it passed.
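One simple way to leave an anti-aliased density trace in a toroidally tiling volume is to splat each particle position into its eight neighboring voxels with trilinear weights, wrapping indices at the borders. The Python sketch below assumes that approach and a straight-line particle path; the actual hair paths and splatting kernel used in the project are not described, and all names are hypothetical.

```python
import math


def make_grid(nx, ny, nz):
    """Density volume indexed as grid[z][y][x], initialized to zero."""
    return [[[0.0] * nx for _ in range(ny)] for _ in range(nz)]


def splat_density(grid, px, py, pz, amount=1.0):
    """Deposit `amount` at a continuous voxel-space position with trilinear weights.

    Integer coordinates are voxel centers; indices wrap in every axis so the
    volume tiles toroidally.
    """
    nz, ny, nx = len(grid), len(grid[0]), len(grid[0][0])
    x0, y0, z0 = math.floor(px), math.floor(py), math.floor(pz)
    fx, fy, fz = px - x0, py - y0, pz - z0
    for dz, wz in ((0, 1.0 - fz), (1, fz)):
        for dy, wy in ((0, 1.0 - fy), (1, fy)):
            for dx, wx in ((0, 1.0 - fx), (1, fx)):
                grid[(z0 + dz) % nz][(y0 + dy) % ny][(x0 + dx) % nx] += amount * wx * wy * wz


def trace_hair(grid, start, direction, steps, step_len=0.5, amount=1.0):
    """Move one particle along a straight line, splatting density at each step."""
    x, y, z = start
    vx, vy, vz = direction
    for _ in range(steps):
        splat_density(grid, x, y, z, amount)
        x, y, z = x + vx * step_len, y + vy * step_len, z + vz * step_len


volume = make_grid(16, 16, 8)
trace_hair(volume, (15.5, 3.0, 0.0), (1.0, 0.0, 1.0), steps=12)  # wraps across the x border
```

Because the eight weights always sum to one, each step deposits exactly `amount` of density regardless of where the particle sits between voxel centers.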

Global lighting was precomputed using a ray caster that I implemented for the project. The spherical harmonics are a set of orthonormal functions defined on the sphere that form a basis for spherical functions. By precomputing the lighting for my fur model using the first 36 spherical harmonic functions (bands 0 through 5), I was able to approximate the lighting for any distant lighting environment. My ray caster worked for both positive and negative light, and it computed the lighting for every voxel in the fur model, for all lighting environments, in about 30 minutes using around 100 samples on the sphere.

Re-creating the final lighting for each voxel was done in a pixel shader on an NVIDIA GeForce 6800 GPU. All the computed spherical harmonic values were stored in textures, which the Cg shader looked up and recombined to reconstruct the lighting.
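The precomputation and reconstruction described above can be sketched in Python as follows, under several assumptions: real orthonormal SH functions up to band 5 (36 coefficients) are evaluated with the standard associated Legendre recurrence, a hypothetical visibility function stands in for the ray caster, and a deterministic equal-area grid replaces the roughly 100 sphere samples mentioned above. Reconstruction is then a dot product between a voxel's transfer coefficients and the lighting coefficients, which is what the orthonormality of the basis buys. This is an illustration, not the project's ray caster or Cg shader.

```python
import math

BANDS = 6  # bands 0..5 give 6 * 6 = 36 coefficients


def assoc_legendre(l, m, x):
    """Associated Legendre polynomial P_l^m(x) for 0 <= m <= l (standard recurrence)."""
    pmm = 1.0
    if m > 0:
        somx2 = math.sqrt((1.0 - x) * (1.0 + x))
        fact = 1.0
        for _ in range(m):
            pmm *= -fact * somx2
            fact += 2.0
    if l == m:
        return pmm
    pmmp1 = x * (2.0 * m + 1.0) * pmm
    if l == m + 1:
        return pmmp1
    pll = 0.0
    for ll in range(m + 2, l + 1):
        pll = ((2.0 * ll - 1.0) * x * pmmp1 - (ll + m - 1.0) * pmm) / (ll - m)
        pmm, pmmp1 = pmmp1, pll
    return pll


def sh(l, m, theta, phi):
    """Real orthonormal spherical harmonic Y_l^m(theta, phi)."""
    am = abs(m)
    k = math.sqrt((2 * l + 1) / (4.0 * math.pi)
                  * math.factorial(l - am) / math.factorial(l + am))
    p = assoc_legendre(l, am, math.cos(theta))
    if m > 0:
        return math.sqrt(2.0) * k * math.cos(m * phi) * p
    if m < 0:
        return math.sqrt(2.0) * k * math.sin(am * phi) * p
    return k * p


def project(func, n_z=32, n_phi=64):
    """Project func(theta, phi) onto the first BANDS**2 SH functions."""
    coeffs = [0.0] * (BANDS * BANDS)
    d_omega = 4.0 * math.pi / (n_z * n_phi)  # equal-area cells
    for i in range(n_z):
        theta = math.acos(-1.0 + (i + 0.5) * 2.0 / n_z)
        for j in range(n_phi):
            phi = (j + 0.5) * 2.0 * math.pi / n_phi
            value = func(theta, phi)
            if value == 0.0:
                continue
            idx = 0
            for l in range(BANDS):
                for m in range(-l, l + 1):
                    coeffs[idx] += value * sh(l, m, theta, phi) * d_omega
                    idx += 1
    return coeffs


def shade(transfer, lighting):
    """Run-time reconstruction: a dot product of transfer and lighting coefficients."""
    return sum(t * c for t, c in zip(transfer, lighting))


light = project(lambda theta, phi: 1.0)  # uniform environment
# Hypothetical voxel whose lower hemisphere is fully occluded by other hairs.
transfer = project(lambda theta, phi: 1.0 if math.cos(theta) > 0.0 else 0.0)
print(shade(transfer, light))  # approximately 2*pi, the unoccluded solid angle
```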

A video (~1.5MB) of the fur model. This image shows a 128x128x32 volume of fur created with the particle system.

Video (~4MB) of the final project, with global lighting. The beginning of the video shows several of the spherical harmonic functions.

Final paper for the project (PDF).

Status: Completed
