CMSC 671 - Fall 2005

Homework #6

Out Tuesday 11/29/05

Due Tuesday 12/13/05

1. Decision Trees (15 pts.)

Suppose that an attribute splits the set of examples E into subsets

Ei, and that each subset has pi positive

examples and ni negative examples. Show that unless

the ratio

pi / (pi+n)

is the same for all i, the attribute has strictly positive information

gain.

2. Learning Bayes Nets (15 pts)

Russell & Norvig, Exercise 20.9. Consider an arbitrary Bayesian

network, a complete data set for that network, and the likelihood for the

data set according to the network. Give a simple proof (i.e., in words)

that the likelihood of the data cannot decrease if we add a new link to the

network and recompute the meaximum-likelihood parameter values.

3. Reinforcement Learning (40 pts)

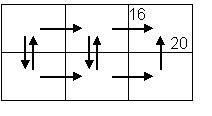

Consider the following deterministic grid world. Allowable moves are

shown by arrows; the numbers indicate the reward for performing an action.

(Unmarked arrows mean that the action yields zero reward/penalty.)

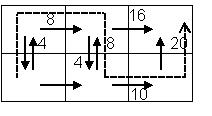

(a) Given current values for Q shown below, show all of the changes in the Q

estimates when the agent takes the path shown by the dotted line. (Note

that the path starts in the lower left cell, and ends in the upper right

cell.) Use gamma=.5.

(b) Show all of the final optimal Q values for gamma=0.5 and for gamma=0.9.

(Mark these values on two copies of the grid.)

(c) Given your Q values above, show all of the V* values for each state, and

mark one optimal policy (action to take in each cell), for gamma=0.5 and

for gamma=0.9.

4. Nash Equilibria (15 pts)

Russell & Norvig, Exercise 17.9. Show that a dominant strategy

equilibrium is always a Nash equilibrium, but not (necessarily) vice versa.

5. Game Theory (15 pts)

Russell & Norvig, Exercise 17.11. Solve the game of three-finger

Morra.