Computer Science and Electrical Engineering

University of Maryland, Baltimore County

Ph.D. Dissertation Defense

Private Packet Filtering: Searching for Sensitive Indicators without Revealing the Indicators in Collaborative Environments

Michael John Oehler

10:30-12:30 Thursday, 21 November 2013, ITE 325


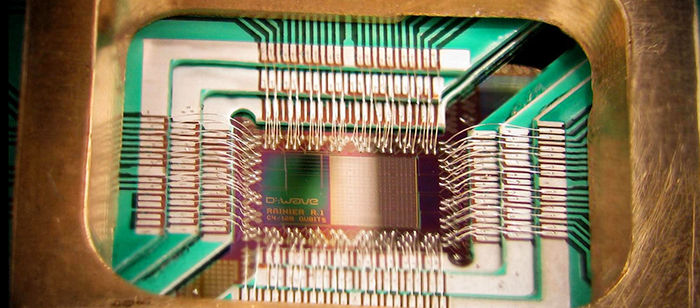

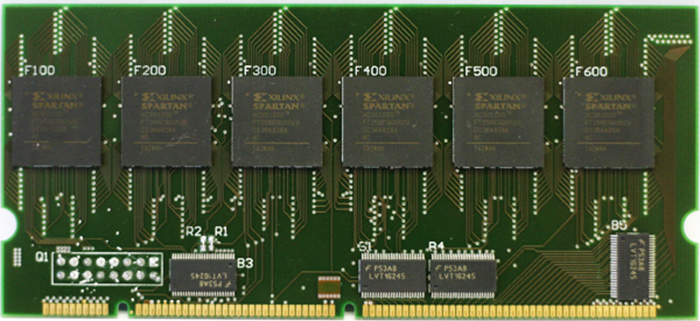

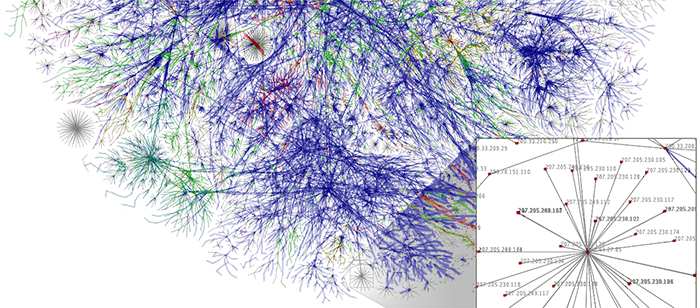

Private Packet Filtering (PPF) is a new capability that preserves the confidentiality of sensitive attack indicators while retrieving the network packets that match those indicators, without revealing to the data holder which packets matched. The capability is achieved through four elements: a high-level language for expressing indicators, a conjunction operator that extends the expressiveness of that language, a simulation of the document detection and recovery rates of the output buffer, and a description of the applicable system facets. Fundamentally, PPF uses a private search mechanism that in turn relies on the partially (additively) homomorphic property of the Paillier cryptosystem.

PPF is intended for a collaborative environment involving a cyber defender and a partner. The defender has access to a set of sensitive indicators and is willing to share some of them with the partner. The partner has access to network data and is willing to share that access. Neither is willing to provide full access. Using the language, the defender creates an encrypted form of the sensitive indicators and passes it to the partner. The partner uses the encrypted indicators to filter packets and returns an encrypted packet capture file. The partner does not decrypt the indicators and cannot identify which packets matched. The defender decrypts the capture file, reassembles the matching packets, gains situational awareness, and notifies the partner of any malicious activity. In this sense, the defender reveals only the observed indicator and retains control of all other indicators. PPF thus allows both parties to gain situational awareness of malicious activity while retaining control, without exposing every indicator or all network data.
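The core idea, filtering packets against encrypted indicators by exploiting Paillier's additive homomorphism, can be illustrated with a toy sketch. The Python below is a minimal illustration under stated assumptions, not PPF's implementation or language: the token dictionary, the integer encoding of packet payloads, the tiny primes, the helper names (`keygen`, `encrypt`, `decrypt`, `add`, `scalar_mul`), and the single-slot output buffer are all hypothetical simplifications. The defender encrypts a 1 for each indicator and a 0 for every other dictionary word. The partner, holding only the public key and ciphertexts, combines the ciphertexts of the words found in each packet. This yields an encryption of the number of indicator hits that the partner cannot read, and the partner folds `hits * payload` into the buffer.

```python
import math
import random

# Toy Paillier keys from small primes (10007, 10009). Insecure: real
# deployments use primes of 1024 bits or more.
def keygen(p=10007, q=10009):
    n = p * q
    lam = math.lcm(p - 1, q - 1)
    g = n + 1  # common choice of generator
    n2 = n * n
    # mu = L(g^lam mod n^2)^(-1) mod n, where L(x) = (x - 1) // n
    mu = pow((pow(g, lam, n2) - 1) // n, -1, n)
    return (n, g), (lam, mu, n)

def encrypt(pub, m):
    n, g = pub
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = random.randrange(1, n)
    return (pow(g, m, n2) * pow(r, n, n2)) % n2

def decrypt(priv, c):
    lam, mu, n = priv
    n2 = n * n
    return ((pow(c, lam, n2) - 1) // n) * mu % n

def add(pub, c1, c2):
    # E(m1) * E(m2) mod n^2 decrypts to m1 + m2
    n = pub[0]
    return (c1 * c2) % (n * n)

def scalar_mul(pub, c, k):
    # E(m)^k mod n^2 decrypts to k * m
    n = pub[0]
    return pow(c, k, n * n)

# --- Defender: encrypt a hypothetical public dictionary, marking indicators ---
pub, priv = keygen()
dictionary = ["alpha", "beta", "gamma", "evil.example"]  # hypothetical tokens
indicators = {"evil.example"}                            # kept secret
encrypted_query = {w: encrypt(pub, 1 if w in indicators else 0) for w in dictionary}

# --- Partner: filter packets using only the public key and ciphertexts ---
packets = [  # (tokens, payload encoded as a small integer), hypothetical data
    (["alpha", "beta"], 1111),
    (["evil.example", "beta"], 4242),
    (["gamma"], 7777),
]
count_slot = encrypt(pub, 0)    # single-slot output buffer (simplification)
payload_slot = encrypt(pub, 0)
for tokens, payload in packets:
    hits = encrypt(pub, 0)
    for t in tokens:
        if t in encrypted_query:  # dictionary is public; the 0/1 marks are not
            hits = add(pub, hits, encrypted_query[t])  # E(number of indicator hits)
    count_slot = add(pub, count_slot, hits)
    payload_slot = add(pub, payload_slot, scalar_mul(pub, hits, payload))  # E(hits * payload)

# --- Defender: decrypt the buffer and recover the matching payload ---
match_count = decrypt(priv, count_slot)
payload_sum = decrypt(priv, payload_slot)
recovered = payload_sum // match_count if match_count == 1 else None
print(f"matches: {match_count}, recovered payload: {recovered}")
```

With exactly one matching packet, the defender decrypts a count of 1 and recovers that packet's payload by division, while every ciphertext the partner handled looks alike. A single slot cannot separate several matches that land in it together. Practical private search schemes therefore spread matches across many buffer slots, which is the kind of output-buffer behavior whose detection and recovery rates the abstract says were simulated. This sketch omits that part.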

Committee: Dhananjay Phatak (chair), Michael Collins, Josiah Dykstra, Russell Fink, John Pinkston and Alan Sherman